Product Walkthrough: A Look Inside Pillar’s AI Security Platform

Unveiling Pillar Security’s AI Platform: A Comprehensive Walkthrough for Secure AI Systems

The rapid integration of Artificial Intelligence (AI) across industries presents unprecedented opportunities, yet it simultaneously introduces a new frontier of cybersecurity challenges. As AI systems become more sophisticated and deeply embedded in critical operations, ensuring their trustworthiness and integrity is paramount. Organizations are grappling with unique threat vectors targeting AI models, data, and infrastructure. Understanding how to proactively secure these complex systems is no longer optional; it’s a strategic imperative.

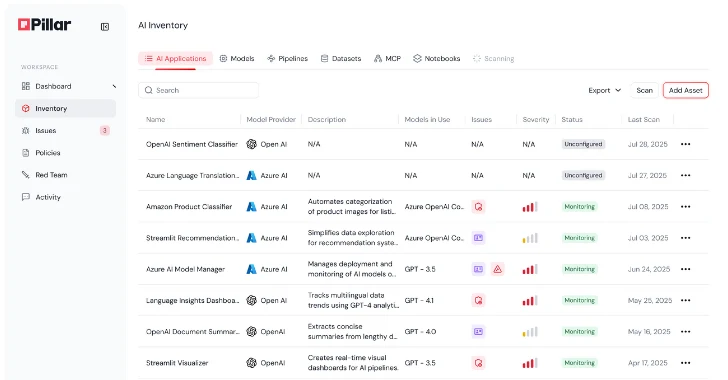

This article walks through Pillar Security's AI security platform and its holistic approach to defending AI systems throughout their lifecycle. Drawing on The Hacker News' report, we explore how Pillar Security aims to build trust in AI systems by closing security gaps from development to deployment.

The Evolving Landscape of AI Security Threats

Traditional cybersecurity measures, while foundational, often fall short against AI-specific threats. These range from data poisoning and adversarial evasion to intellectual property theft. Imagine a scenario where a seemingly benign input manipulates a critical AI-powered decision, or where an attacker extracts sensitive training data from a deployed model. These aren't theoretical concerns; documented instances like CVE-2022-26157 (related to TensorFlow Serving) highlight the real-world implications of insecure AI deployments.
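
To make the evasion scenario concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a classic evasion technique, applied to a toy logistic-regression model with a 0.5 decision threshold. The weights, the input, and the helper names `predict` and `fgsm` are hypothetical and chosen only for illustration. This is not a description of how Pillar Security tests models; it only shows that a small, bounded change to every feature can flip a model's decision.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """Fast Gradient Sign Method: nudge each feature by eps to increase the loss.

    For binary cross-entropy with p = sigmoid(w.x + b), the gradient of the
    loss with respect to the input x is (p - y) * w.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input, chosen only for illustration.
w, b = [2.0, -1.0, 0.5], -0.2
x = [0.3, 0.2, 0.4]
x_adv = fgsm(w, b, x, y=1, eps=0.15)

print(round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))  # 0.599 0.469
```

Each feature moves by at most 0.15, yet the predicted probability falls below 0.5 and the decision flips. Against deep networks the same idea uses gradients computed by the ML framework; toolkits such as ART implement FGSM for real models.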

Securing AI requires a paradigm shift, moving beyond perimeter defenses to encompass the very core of AI development and operation. This includes validating data integrity, ensuring model robustness, and protecting against novel adversarial techniques.
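
Validating data integrity can start with something as simple as recording a cryptographic digest of every dataset shard when the data is approved, then re-checking those digests before each training run. The sketch below assumes shards are available as in-memory bytes; the shard names and the `build_manifest`/`verify_manifest` helpers are hypothetical. It catches tampering that happens after the manifest is recorded, not poisoned data that was already present, and the manifest itself has to be stored somewhere an attacker cannot rewrite.

```python
import hashlib


def build_manifest(shards: dict[str, bytes]) -> dict[str, str]:
    """Record a SHA-256 digest per dataset shard at a trusted checkpoint."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in shards.items()}


def verify_manifest(shards: dict[str, bytes], manifest: dict[str, str]) -> list[str]:
    """Report shards that are missing, modified, or absent from the manifest."""
    problems = []
    for name, expected in sorted(manifest.items()):
        data = shards.get(name)
        if data is None:
            problems.append(f"missing: {name}")
        elif hashlib.sha256(data).hexdigest() != expected:
            problems.append(f"modified: {name}")
    for name in sorted(shards.keys() - manifest.keys()):
        problems.append(f"unexpected: {name}")
    return problems


# Hypothetical shards approved for training.
shards = {"train-000.csv": b"x,y\n1,0\n", "train-001.csv": b"x,y\n2,1\n"}
manifest = build_manifest(shards)

tampered = dict(shards)
tampered["train-001.csv"] = b"x,y\n2,0\n"  # one label silently flipped
tampered["extra.csv"] = b"x,y\n9,1\n"      # shard injected after sign-off
print(verify_manifest(tampered, manifest))
# ['modified: train-001.csv', 'unexpected: extra.csv']
```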

Pillar Security’s Holistic Approach to AI Trust

Pillar Security distinguishes itself by adopting a comprehensive strategy that spans the entire software development lifecycle (SDLC) of AI systems, from development through deployment. Its core objective is to establish and maintain trust in AI, on the principle that security must be built in from the ground up rather than added as an afterthought. This holistic approach is fundamental to the platform's design.

The platform introduces novel methods for identifying and mitigating AI-specific threats, moving beyond traditional vulnerability scanning to address the unique attack surface presented by machine learning models and their underlying data. This proactive stance aims to detect and neutralize threats at their earliest stages.

Key Pillars of the Platform

While the full scope of Pillar Security’s platform is extensive, several key areas stand out in their mission to secure AI:

- AI-Native Threat Detection: Unlike conventional security tools, Pillar Security's platform is engineered to understand the nuances of AI threats. This includes detecting subtle anomalies that could indicate data poisoning, model drift, or adversarial manipulation.

- Full Lifecycle Coverage: The platform integrates security checks and controls across the entire AI pipeline – from data ingestion and model training to deployment and continuous monitoring. This ensures that vulnerabilities are identified and addressed at every stage.

- Building Trust in AI Systems: Beyond mere threat detection, Pillar Security focuses on bolstering the overall trustworthiness of AI. This involves validating model integrity, ensuring explainability where possible, and maintaining resilience against sophisticated attacks.
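
Detecting drift or manipulation in production usually means comparing a live distribution against a trusted baseline. One widely used statistic for this is the Population Stability Index (PSI); the sketch below computes it over model output scores using equal-width bins. PSI is a generic technique swapped in here for illustration, not Pillar Security's documented method, and the bin count, the `eps` smoothing term, the helper names, and the sample data are all assumptions. A commonly quoted rule of thumb treats PSI above roughly 0.25 as a significant shift worth investigating.

```python
import math


def bin_fractions(values: list[float], lo: float, hi: float, bins: int) -> list[float]:
    """Fraction of values falling in each of `bins` equal-width bins over [lo, hi]."""
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values into edge bins
    return [c / len(values) for c in counts]


def psi(baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` relative to `baseline`."""
    lo, hi = min(baseline), max(baseline)
    p = bin_fractions(baseline, lo, hi, bins)
    q = bin_fractions(current, lo, hi, bins)
    # eps keeps the logarithm finite when a bin is empty in one of the samples
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))


# Hypothetical score samples.
baseline = [i / 100 for i in range(100)]        # scores observed at validation time
same = list(baseline)
shifted = [0.5 + i / 200 for i in range(100)]   # production scores pushed upward

print(psi(baseline, same))            # 0.0
print(psi(baseline, shifted) > 0.25)  # True
```

Equal-width bins keep the sketch short; quantile bins derived from the baseline are a common alternative when scores are heavily skewed.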

Remediation Actions and Best Practices for AI Security

Proactive measures are essential for securing AI infrastructure. Organizations can implement several best practices and leverage tools to enhance their AI security posture:

- Secure Data Pipelines: Implement robust data validation, anonymization, and access control mechanisms for all training and inference data. Ensure data lineage and integrity.

- Model Hardening: Employ adversarial training, differential privacy, and regular model audits to improve resilience against adversarial attacks and training-data inference attacks such as membership inference.

- Integrate Security into MLOps: Embed security checks and gates within your Machine Learning Operations (MLOps) pipelines. Automate vulnerability scanning for libraries and frameworks.

- Continuous Monitoring: Deploy anomaly detection systems to monitor AI model behavior in production, looking for deviations that could indicate a compromise or attack.

- Supply Chain Security: Vet third-party AI models and components for known vulnerabilities. An example of a supply chain risk in AI can be seen in issues like CVE-2021-39294, concerning a universal deserialization vulnerability in PyTorch.
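
PyTorch checkpoints saved with `torch.save` have traditionally relied on Python's `pickle` format, and unpickling untrusted data can execute arbitrary code; recent PyTorch releases offer `torch.load(..., weights_only=True)` to restrict what gets loaded. As an additional vetting step, the sketch below lists every global a raw pickle stream would import, using the standard library's `pickletools.genops` so that nothing is executed. The allowlist and the `scan_pickle` helper are hypothetical, and the scanner works on raw pickle bytes only (checkpoint archives would first need to be unpacked). Treat it as an illustration of the idea, not a complete scanner.

```python
import pickle
import pickletools

# Hypothetical allowlist: the only import a plain state-dict-style file should need.
ALLOWED_GLOBALS = {("collections", "OrderedDict")}

STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}
MEMO_OPS = {"MEMOIZE", "PUT", "BINPUT", "LONG_BINPUT"}


def scan_pickle(data: bytes, allowed=ALLOWED_GLOBALS) -> list[str]:
    """Return 'module.name' for every non-allowlisted global the pickle imports.

    pickletools.genops only decodes opcodes; the pickle is never loaded.
    """
    findings = []
    recent = []  # (opcode name, arg) of preceding opcodes, memo stores skipped
    for opcode, arg, _pos in pickletools.genops(data):
        name = opcode.name
        if name in MEMO_OPS:
            continue
        if name in ("GLOBAL", "INST"):
            # Protocols 0-3: the argument is the string "module name".
            ref = tuple(arg.split(" ", 1))
        elif name == "STACK_GLOBAL":
            # Protocol 4+: module and name are the two strings pushed just before.
            # Anything else (e.g. values fetched from the memo) stays unresolved
            # and is therefore flagged.
            prev = recent[-2:]
            if len(prev) == 2 and all(op in STRING_OPS for op, _ in prev):
                ref = (prev[0][1], prev[1][1])
            else:
                ref = ("<unresolved>", "<unresolved>")
        else:
            recent.append((name, arg))
            continue
        if ref not in allowed:
            findings.append(f"{ref[0]}.{ref[1]}")
        recent.append((name, arg))
    return findings


class Payload:
    """Builds a pickle that would call eval() if it were ever loaded."""

    def __reduce__(self):
        return (eval, ("1 + 1",))


weights = pickle.dumps({"layer1.weight": [0.1, 0.2]})
malicious = pickle.dumps(Payload())  # only serialized here; never pass it to pickle.loads
print(scan_pickle(weights))    # []
print(scan_pickle(malicious))  # ['builtins.eval']
```

Flagging anything outside a short allowlist, rather than searching for known-bad names such as `os.system`, keeps the check conservative: an unfamiliar import is reported even when it turns out to be harmless.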

Tools for AI Security and Validation

| Tool Name | Purpose | Link |
|---|---|---|
| IBM Adversarial Robustness Toolbox (ART) | A Python library for machine learning security that helps developers and researchers evaluate and defend models against adversarial threats such as evasion and poisoning. | https://github.com/Trusted-AI/adversarial-robustness-toolbox |
| Microsoft Counterfit | An open-source command-line tool for assessing the security of machine learning systems by automating adversarial attacks against AI models. | https://github.com/Azure/Counterfit |
| OWASP Top 10 for LLM | A reference document outlining the ten most critical security risks specific to applications built on Large Language Models. | https://llm.owasp.org/llm-top-10-2023/ |

Conclusion

Pillar Security's platform represents a significant step toward securing the AI revolution. By taking a holistic, lifecycle-oriented approach to AI security, it aims to help organizations build, deploy, and operate trusted AI systems with greater confidence. As AI becomes embedded in core business functions, platforms like Pillar Security's are likely to become essential tools for cybersecurity professionals working in this complex and critical domain.

The imperative to secure AI is clear. By understanding the unique challenges and leveraging specialized solutions, organizations can unlock the full potential of AI while effectively mitigating its inherent risks.