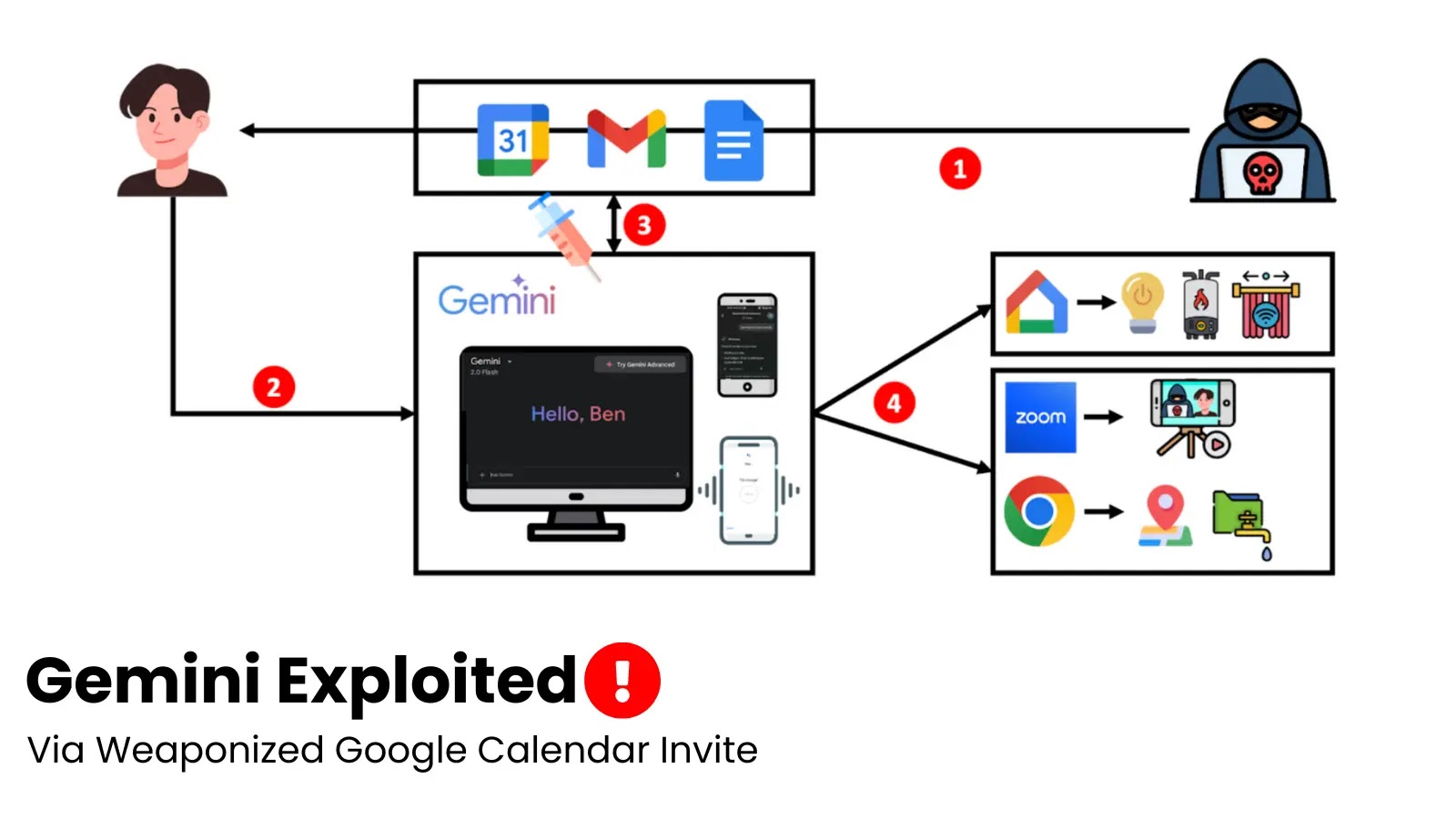

Gemini Exploited via Prompt Injection in Google Calendar Invite to Steal Emails, and Control Smart Devices

The lines between our digital assistants and our personal lives are blurring, bringing convenience but also unprecedented security risks. Imagine an attacker leveraging your seemingly innocuous Google Calendar invite to not only steal your emails but also seize control of smart devices within your home. This isn’t a science fiction plot; it’s a chilling reality exposed by recent research into prompt injection attacks targeting Google’s Gemini AI.

Known as “Targeted Promptware Attacks,” this sophisticated method exploits AI assistants by injecting malicious commands through indirect, often hidden, channels. The consequences extend far beyond data theft, demonstrating a clear path for adversaries to compromise digital privacy and even exert physical control over smart home ecosystems.

The Anatomy of a Targeted Promptware Attack on Gemini

At its core, this attack vector leverages indirect prompt injection. Unlike direct prompt injection, where an attacker crafts a malicious prompt and directly feeds it to an AI, indirect methods embed the malicious prompt within data that the AI is designed to process. In the case of Gemini, this data can be a Google Calendar invitation or an email.

When Gemini, acting as an AI assistant, processes these invites or emails – perhaps to summarize content, schedule events, or manage tasks – it implicitly executes the hidden malicious instructions. This subversion allows attackers to:

- Exfiltrate Sensitive Data: Compromise email accounts, steal personal information, and access confidential documents.

- Control Smart Devices: Manipulate IoT devices like smart locks, thermostats, and security cameras, potentially granting unauthorized physical access or surveillance.

- Initiate Malicious Actions: Send phishing emails from compromised accounts, spread malware, or conduct further reconnaissance within a network.

Preliminary research indicates a concerning trend: 73% of identified prompt injection threats pose high to critical risks, underscoring the severity of this vulnerability.

Why Is This a Significant Threat?

This attack vector is particularly insidious because it leverages trusted platforms and interactions. Users are conditioned to view calendar invites and emails as routine communications. The AI’s role in processing this information, intended for convenience, becomes the very mechanism for exploitation. Furthermore, the ubiquitous nature of Google services and AI assistants like Gemini amplifies the potential attack surface, making a vast number of users and organizations susceptible.

The ability to pivot from email access to physical device control represents a critical escalation in cyber threat capabilities, blurring the lines between the digital and physical realms.

Remediation Actions and Mitigations

Addressing prompt injection vulnerabilities requires a multi-layered approach, combining user awareness with robust technical controls.

- Enhance User Awareness: Educate users about the risks of AI interaction, even with seemingly benign inputs. Emphasize caution when an AI assistant performs unexpected actions or requests unusual permissions.

- Implement Strict Access Controls: Limit the permissions granted to AI assistants. If an AI doesn’t need access to smart home controls or deep email integration for its core function, restrict those capabilities.

- Monitor AI Interactions: Implement logging and monitoring for AI assistant activities. Anomalous behavior, such as attempts to access unusual services or send emails to external addresses, should trigger alerts.

- Regular Software Updates: Keep all AI assistant software, operating systems, and integrated applications up to date. Vendors continually release patches for newly discovered vulnerabilities.

- Employ Content Filters: Utilize email and calendar filters to detect and quarantine suspicious content, including embedded code or unusual formatting that could indicate prompt injection attempts.

- Segregate AI Environments: For critical systems, consider segregating AI assistant environments to limit the blast radius in case of a compromise.

Detection and Prevention Tools

While prompt injection is an evolving threat, several categories of tools can assist in detection and prevention:

| Tool Name | Purpose | Link |

|---|---|---|

| Email Filtering Solutions (e.g., Proofpoint, Mimecast) | Detect malicious links, attachments, and potentially anomalous content in emails. | Proofpoint Mimecast |

| Endpoint Detection and Response (EDR) Solutions | Monitor user and AI behavior on endpoints for suspicious activities and exfiltration attempts. | CrowdStrike Falcon Insight |

| Security Information and Event Management (SIEM) Systems | Aggregate logs from various sources, including AI assistants, for incident detection and correlation. | Splunk |

| Cloud Access Security Brokers (CASBs) | Enforce security policies for cloud services, including monitoring AI interactions with cloud data. | Netskope |

| Intrusion Detection/Prevention Systems (IDS/IPS) | Flag and block network traffic associated with data exfiltration or command and control. | Snort |

Looking Forward: Securing the AI Frontier

The exploitation of Gemini through indirect prompt injection serves as a stark reminder: as AI assistants become more integrated into our digital lives, the attack surface expands. Organizations and individual users must adopt a proactive stance, understanding these novel attack vectors and implementing robust security measures. The battle for digital privacy and control extends beyond traditional network perimeters, directly into the capabilities and vulnerabilities of our intelligent agents. Protecting ourselves means not just securing our devices, but also critically evaluating how our AI tools interact with the world.