New Red Teaming Tool “Red AI Range” Discovers, Analyzes, and Mitigates AI Vulnerabilities

Unmasking AI Vulnerabilities: Introducing Red AI Range for Robust Security

As artificial intelligence is rapidly integrated into critical systems, the need for robust AI security keeps growing. AI models, like any complex software, have their own classes of vulnerabilities that can be exploited, leading to data breaches, system manipulation, and reputational damage. Traditional cybersecurity red teaming approaches often fall short when assessing AI-specific threats, which creates a need for specialized tools designed to scrutinize and harden AI systems. Enter Red AI Range (RAR), an open-source AI red teaming platform built to help security professionals discover, analyze, and mitigate these emerging threats.

What is Red AI Range (RAR)?

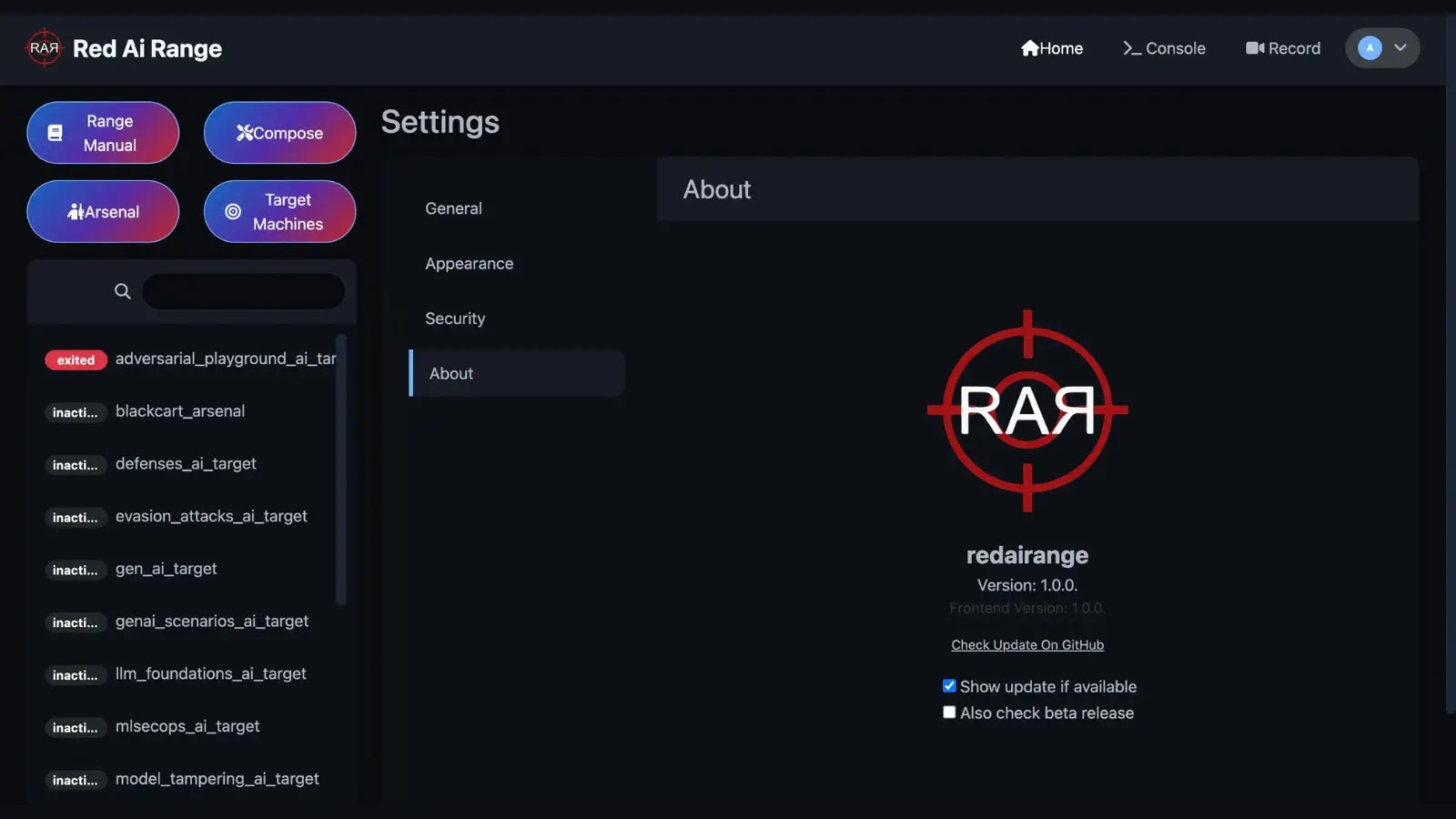

Red AI Range is more than just a tool; it’s a comprehensive platform engineered to simulate realistic attack scenarios against AI systems. By leveraging containerized architectures and automated tooling, RAR streamlines the entire red teaming process for AI. It empowers security analysts to move beyond theoretical understanding and engage with practical, hands-on vulnerability discovery within isolated and controlled environments. This capability is paramount for identifying weaknesses before malicious actors can exploit them in production.

Key Features and Functionality

RAR’s design focuses on efficiency and realism, providing a robust environment for AI security testing. Its core functionalities are built to facilitate a systematic approach to AI red teaming:

- Isolated Testing Environments: RAR utilizes “Arsenal” and “Target” buttons to spin up isolated AI testing containers. This ensures that any tests, even highly aggressive ones, do not impact production systems or other ongoing development. This sandboxed approach is crucial for safe and repeatable vulnerability discovery.

- Attack Recording and Playback: The platform records attack vectors and responses, allowing for detailed investigation, reproduction of vulnerabilities, and the development of effective countermeasures. This feature is invaluable for incident response and post-mortem analysis.

- Automated AI Attack Generation: RAR automates the generation of diverse AI attack types, including adversarial machine learning attacks (e.g., evasion, poisoning), data exfiltration attempts, and model extraction. This automation significantly reduces the manual effort typically associated with comprehensive red teaming.

- Vulnerability Analysis and Reporting: The platform provides tools for analyzing identified vulnerabilities, understanding their impact, and generating actionable reports. This streamlined reporting aids in conveying risks to stakeholders and prioritizing remediation efforts.

- Containerized Architecture: The use of containerization ensures portability, scalability, and ease of deployment. Security teams can quickly set up and tear down testing environments as needed, adapting to different AI models and scenarios.
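To make the recording-and-playback idea concrete, here is a minimal sketch in Python. It is not RAR's implementation or API: the `AttackRecorder` class, the `replay` function, and the `toy_target` stand-in for a model endpoint are all hypothetical names chosen for illustration. It only shows the general pattern of logging each payload with its response and re-sending the payloads later to check whether the behavior still reproduces.

```python
import json
from dataclasses import dataclass, asdict
from typing import Callable, List


@dataclass
class AttackRecord:
    """One attack attempt: what was sent and what came back."""
    attack_type: str
    payload: str
    response: str


class AttackRecorder:
    """Hypothetical recorder: keeps attempts in memory, serializes as JSON Lines."""

    def __init__(self) -> None:
        self.records: List[AttackRecord] = []

    def run(self, attack_type: str, payload: str,
            target: Callable[[str], str]) -> str:
        # Send the payload to the target and keep both sides of the exchange.
        response = target(payload)
        self.records.append(AttackRecord(attack_type, payload, response))
        return response

    def dumps(self) -> str:
        # One JSON object per line, so sessions are easy to append and diff.
        return "\n".join(json.dumps(asdict(r)) for r in self.records)


def replay(log: str, target: Callable[[str], str]) -> List[bool]:
    """Re-send each recorded payload; True means the response still matches."""
    results = []
    for line in log.splitlines():
        record = AttackRecord(**json.loads(line))
        results.append(target(record.payload) == record.response)
    return results


def toy_target(prompt: str) -> str:
    # Stand-in for a model endpoint running inside an isolated container.
    return "LEAKED" if "ignore previous" in prompt.lower() else "ok"
```

Replaying a saved log against a patched target is a simple regression check: an entry that comes back `False` means the target no longer responds the way it did when the attack was recorded.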

Why AI-Specific Red Teaming Matters

Traditional red teaming, while effective for general IT infrastructure, often lacks the specialized understanding of AI model intricacies. AI systems introduce new attack surfaces, such as:

- Adversarial Examples: Subtle perturbations to input data that cause a model to misclassify. For instance, an attacker might slightly alter an image to make a facial recognition system incorrectly identify a person.

- Model Inversion Attacks: Reconstructing sensitive training data from a deployed model’s output. This poses a significant privacy risk, especially for models trained on personal or confidential information.

- Data Poisoning: Injecting malicious data into the training dataset to compromise the model’s integrity and performance. This can lead to biased outputs or enable backdoor access.

- Prompt Injection: Manipulating Large Language Models (LLMs) through crafted input to elicit unintended responses or execute unauthorized actions. Prompt injection is listed as LLM01 in the OWASP Top 10 for LLM Applications.

RAR addresses these unique challenges head-on, providing a dedicated framework to expose and understand these vulnerabilities in a controlled setting.
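As an illustration of the first item, adversarial examples, the sketch below applies the Fast Gradient Sign Method (FGSM) to a tiny logistic regression classifier using only the Python standard library. The weights, bias, input, and epsilon are made-up values for demonstration, and this is not taken from RAR. Real attacks target far larger models, but the mechanism is the same: nudge each input feature by a small amount in the direction that increases the model's loss.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights: List[float], bias: float, x: List[float]) -> float:
    """Probability that x belongs to class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(weights: List[float], bias: float, x: List[float],
         label: int, epsilon: float) -> List[float]:
    """Move each feature by epsilon in the direction that increases the loss."""
    # For logistic regression with cross-entropy loss, dLoss/dx = (p - y) * w.
    p = predict_proba(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Illustrative (made-up) model and input; the true label is class 1.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.2
x_clean = [0.4, 0.3, 0.2]

x_adv = fgsm(WEIGHTS, BIAS, x_clean, label=1, epsilon=0.2)
print("clean probability:      ", round(predict_proba(WEIGHTS, BIAS, x_clean), 3))
print("adversarial probability:", round(predict_proba(WEIGHTS, BIAS, x_adv), 3))
```

With these values, no feature moves by more than 0.2, yet the predicted probability for class 1 drops from above 0.5 to below it, so the predicted class flips.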

Remediation Actions for AI Vulnerabilities

Identifying AI vulnerabilities through tools like Red AI Range is only half the battle. Effective remediation is crucial for hardening AI systems. Here are key actions:

- Robust Input Validation: Implement stringent checks on all input data, such as type, range, and format, to reduce exposure to adversarial examples at inference time, and vet training data sources to limit data poisoning.

- Adversarial Training: Incorporate adversarial examples into the model’s training data to improve its robustness against such attacks.

- Differential Privacy: Apply privacy-preserving techniques during training to minimize the risk of model inversion attacks and protect sensitive data.

- Regular Model Monitoring: Continuously monitor model performance and outputs for anomalies that could indicate an attack or compromise.

- Secure Development Lifecycle (SDLC) for AI: Integrate security considerations throughout the entire AI development process, from data collection and model design to deployment and maintenance.

- Regular Red Teaming and Penetration Testing: Use tools like Red AI Range proactively to identify new vulnerabilities as models evolve and new attack vectors emerge.
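The model monitoring item above can be sketched with a simple statistical check. The example below assumes the team logs a per-request confidence score and compares the mean of a recent window against a known-good baseline using a z-score. The function names, the sample scores, and the threshold of 3.0 are illustrative assumptions, not part of RAR or any specific monitoring product.

```python
import math
import statistics
from typing import Sequence


def drift_z_score(baseline: Sequence[float], recent: Sequence[float]) -> float:
    """z-score of the recent window's mean relative to the baseline scores."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has zero variance; z-score is undefined")
    # Standard error of the mean for a window of this size.
    standard_error = sigma / math.sqrt(len(recent))
    return (statistics.mean(recent) - mu) / standard_error


def is_anomalous(baseline: Sequence[float], recent: Sequence[float],
                 threshold: float = 3.0) -> bool:
    """Flag the window when its mean is more than `threshold` standard errors away."""
    return abs(drift_z_score(baseline, recent)) > threshold


# Made-up confidence scores for illustration.
baseline_scores = [0.91, 0.93, 0.90, 0.92, 0.94, 0.91, 0.93, 0.92]
normal_window = [0.92, 0.91, 0.93, 0.92]
suspect_window = [0.71, 0.65, 0.70, 0.68]

print("normal window flagged: ", is_anomalous(baseline_scores, normal_window))
print("suspect window flagged:", is_anomalous(baseline_scores, suspect_window))
```

A check like this only signals that something changed. A sudden drop in confidence can come from an attack, but also from benign data drift, so a flagged window is a prompt for investigation rather than proof of compromise.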

Tools for Detecting and Mitigating AI Vulnerabilities

While Red AI Range is a powerful red teaming platform, other tools and frameworks complement its capabilities in detecting and mitigating AI vulnerabilities.

| Tool Name | Purpose | Link |
|---|---|---|
| IBM Adversarial Robustness Toolbox (ART) | A Python library for machine learning security, providing utilities for assessing and defending against adversarial attacks. | GitHub – IBM ART |
| Microsoft Counterfit | An open-source automation framework for assessing the security of AI systems by generating adversarial attacks. | GitHub – Microsoft Counterfit |
| OWASP Top 10 for LLM | Identifies and prioritizes the most critical security risks in Large Language Models (LLMs). | OWASP Top 10 for LLM |
| Weights & Biases | MLOps platform that can help monitor models for performance drifts and anomalies, indicating potential attacks. | Weights & Biases |

Looking Ahead: The Future of AI Security

The introduction of tools like Red AI Range signifies a crucial shift in how organizations approach AI security. As AI systems become more ubiquitous and complex, the need for proactive security measures designed specifically for their unique attack surfaces will only grow. Open-source initiatives like RAR enable broader access to advanced security tools, fostering collaboration and innovation in the AI security community. Adopting such platforms is no longer optional; it is fundamental to building trust and ensuring the responsible deployment of AI technologies.