HackedGPT – 7 New Vulnerabilities in GPT-4o and GPT-5 Enable 0-Click Attacks

Recent research has disclosed seven vulnerabilities, collectively dubbed “HackedGPT,” affecting OpenAI’s Large Language Models (LLMs) GPT-4o and GPT-5. These are not isolated flaws; they challenge the security architecture of leading AI systems and could enable zero-click attacks that silently exfiltrate sensitive user data.

For IT professionals, security analysts, and developers, understanding these vulnerabilities is paramount. They highlight a new frontier in cyber threats, where indirect prompt injections become a powerful vector for data breaches, eroding the trust users place in these advanced AI systems.

Understanding the HackedGPT Vulnerabilities: A Zero-Click Threat

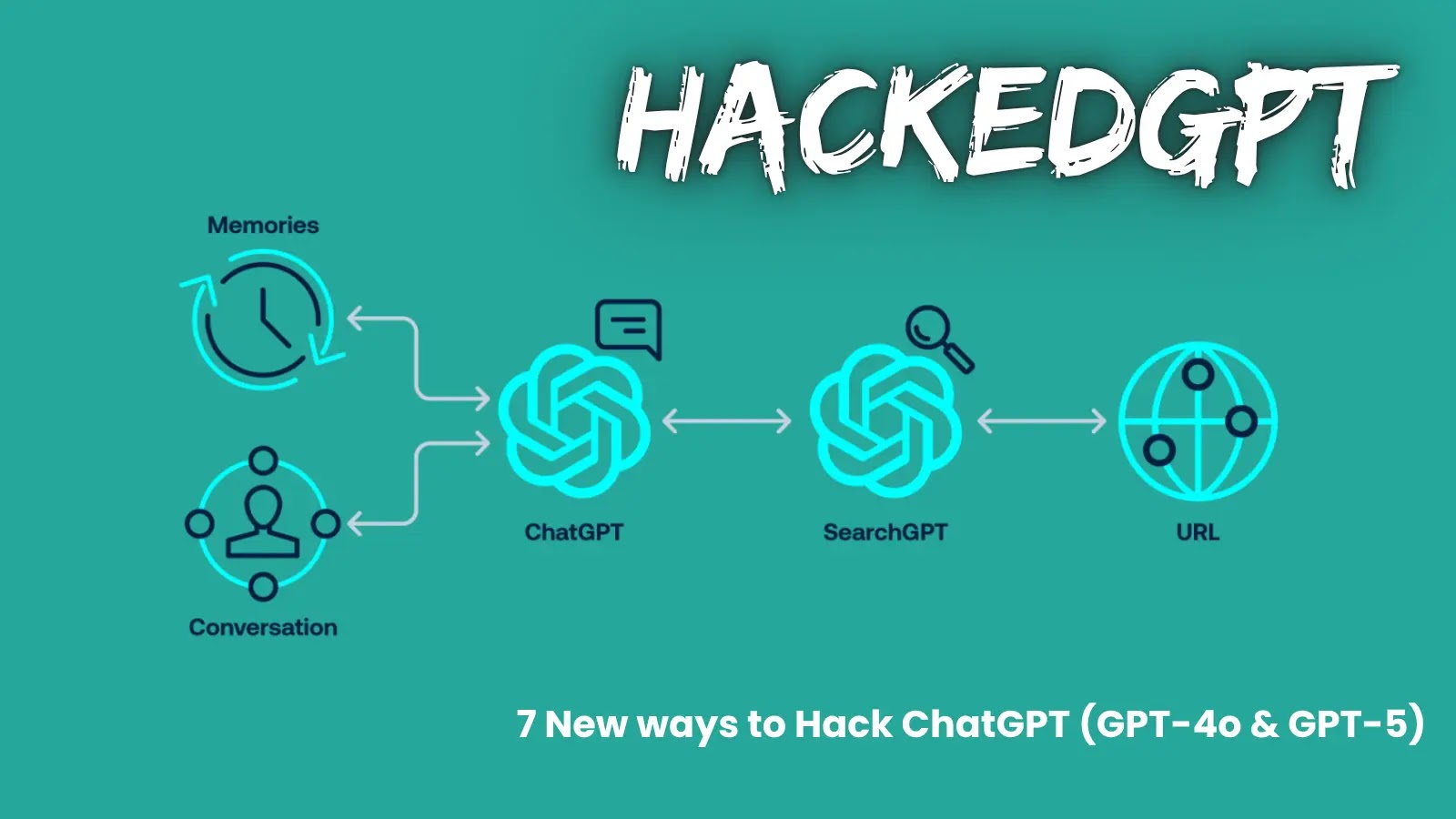

The core of the HackedGPT discovery lies in seven critical vulnerabilities, impacting both GPT-4o and GPT-5, that facilitate indirect prompt injection attacks. Unlike traditional prompt injections where a user directly manipulates the AI’s input, indirect prompt injections are far more insidious. They leverage external data sources, which the LLM processes, to embed malicious instructions without the user’s explicit knowledge or action.

These vulnerabilities allow attackers to manipulate the AI into performing unauthorized actions, the most alarming being the exfiltration of private user data. This includes entire chat histories and sensitive information stored in the AI’s “memory” (the contextual information it retains from past interactions). The “zero-click” aspect makes these attacks particularly dangerous: the user does not need to click a link or open a file, because an ordinary request that leads the AI to process compromised external data could be enough to initiate a breach.

The Mechanics of Indirect Prompt Injection and Data Exfiltration

Indirect prompt injection exploits the fact that LLMs ingest and act on large amounts of external data. Imagine an AI told to summarize a document or analyze an email. If that document or email contains a hidden, carefully crafted prompt, the AI may treat it as an instruction and execute it. This hidden prompt could instruct the AI to:

- Identify and extract specific patterns of data from its memory (e.g., credit card numbers, personal identifiers, login credentials).

- Construct a response that subtly includes this extracted sensitive data.

- Send this data to an attacker-controlled endpoint (e.g., via a seemingly innocuous “web search” or “API call” that the AI is instructed to perform).

The risk extends beyond direct input. If a user interacts with an application or system that integrates with GPT-4o or GPT-5, and that system itself is compromised or feeds malicious data into the AI, the user remains vulnerable. The AI acts as an unwitting accomplice, turning its powerful processing capabilities against its own users.
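To make the idea of a hidden prompt concrete, the sketch below scans an HTML page for text that a human reader would not see but that a model would still receive if the raw markup were passed into its context. It is only an illustration under simple assumptions: the function name `find_hidden_text` and the two hiding techniques it checks (HTML comments and inline `display:none` / `visibility:hidden` styles) are choices made here, not details from the HackedGPT research, and real attacks can hide text in many other ways.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class _HiddenTextFinder(HTMLParser):
    """Collects text that is invisible to a reader but present in the markup."""

    def __init__(self):
        super().__init__()
        self.hidden = []   # hidden snippets, in document order
        self._stack = []   # one bool per open element: is it (or a parent) hidden?

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = (dict(attrs).get("style") or "").replace(" ", "").lower()
        hidden_here = "display:none" in style or "visibility:hidden" in style
        parent_hidden = bool(self._stack) and self._stack[-1]
        self._stack.append(hidden_here or parent_hidden)

    def handle_endtag(self, tag):
        # Assumes reasonably well-formed markup; unclosed tags would skew the stack.
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        if self._stack and self._stack[-1] and data.strip():
            self.hidden.append(data.strip())

    def handle_comment(self, data):
        # Comments are never rendered, yet they survive in the raw HTML.
        if data.strip():
            self.hidden.append(data.strip())


def find_hidden_text(html: str) -> list[str]:
    """Return comment text and inline-hidden text found in an HTML string."""
    finder = _HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden
```

An application could run a check like this on fetched pages and either drop the hidden snippets or flag the page for review before it reaches the model. It does not detect instructions written in plain visible text.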

Impact on GPT-4o and GPT-5 Security

The finding that these vulnerabilities affect both GPT-4o and GPT-5 underscores the architectural challenges in securing advanced LLMs. As these models become more integrated into critical applications and handle increasingly sensitive information, the potential for widespread data breaches grows.

For developers building on OpenAI’s platforms, this necessitates a rigorous re-evaluation of data handling practices, input validation, and output sanitization. Users, too, must be aware that even seemingly secure AI interactions could harbor hidden risks.

Remediation Actions and Mitigating Indirect Prompt Injection

Addressing these vulnerabilities requires a multi-faceted approach. Fixes to the models themselves depend on OpenAI, but users and developers can implement several strategies to mitigate the risks:

- Isolate Sensitive Information: Design AI applications to minimize the exposure of highly sensitive information to the general AI context or memory. Implement strict data access controls for the AI.

- Robust Input Validation and Sanitization: Implement stringent validation and sanitization for all input fed into the AI, regardless of its source (user, external API, linked documents). Look for unusual patterns, encoded data, or attempts to inject instructions.

- Output Filtering and Validation: Scrutinize AI outputs for unexpected data or structured information that might indicate exfiltration. Implement heuristic analysis to flag suspicious responses.

- Principle of Least Privilege for AI: Restrict the AI’s capabilities and access to external systems (e.g., web browsing, API calls) only to what is absolutely necessary for its function. Limit its ability to initiate outbound connections.

- Anomaly Detection: Monitor AI interactions for unusual patterns, such as sudden changes in output structure, attempts to access unexpected data sources, or high volumes of data transfer.

- User Awareness and Education: Educate users about the potential for indirect prompt injections and the importance of caution when interacting with AI, especially when processing external content.

- Sandboxing AI Environments: Where feasible, run AI interactions involving sensitive data in isolated, sandboxed environments to contain potential breaches.
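Two of the strategies above, input screening and output filtering, can be sketched in a few lines. The example below is a minimal illustration, not a complete defense: the phrase list, the allowlisted hosts in `ALLOWED_HOSTS`, and the function names are all hypothetical, and pattern matching of this kind is easy for a determined attacker to evade. It is best treated as one layer alongside least privilege and sandboxing.

```python
import re
from urllib.parse import urlsplit

# Hypothetical allowlist: hosts this application permits in model output.
ALLOWED_HOSTS = {"docs.example.com", "example.com"}

# Illustrative heuristics only; real injections vary widely.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.I),
    re.compile(r"do not (tell|inform|mention).{0,40}\buser\b", re.I),
    re.compile(r"[A-Za-z0-9+/]{200,}={0,2}"),  # long base64-like run
]

URL_RE = re.compile(r"https?://[^\s<>()\[\]]+")


def flag_untrusted_input(text: str) -> list[str]:
    """Return the heuristic patterns that match externally sourced text."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(text)]


def strip_unapproved_urls(model_output: str) -> tuple[str, list[str]]:
    """Replace URLs whose host is not allowlisted.

    Returns the cleaned text and the list of removed URLs, so that a
    removed link (a possible exfiltration channel) can also be logged.
    """
    removed = []

    def _check(match):
        url = match.group(0)
        host = (urlsplit(url).hostname or "").lower()
        if host in ALLOWED_HOSTS:
            return url
        removed.append(url)
        return "[link removed]"

    return URL_RE.sub(_check, model_output), removed
```

The output filter matters because a model that has been tricked into embedding private data in a URL (for example, in the query string of an image link) only leaks that data if the URL is actually rendered or fetched.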

Tools for Detection and Mitigation

While specific tools for indirect prompt injection are still evolving, existing cybersecurity solutions can contribute to a broader mitigation strategy. There are no specific CVEs publicly assigned for this HackedGPT research at the time of writing, but developers should monitor OpenAI’s security advisories and the MITRE ATLAS knowledge base, which catalogs LLM prompt injection as technique AML.T0051.

| Tool Name | Purpose | Link / Examples |
|---|---|---|
| OWASP Top 10 for LLM Applications (Guidance) | Provides a framework for identifying and mitigating LLM-specific security risks, including prompt injection. | https://owasp.org/www-project-top-10-for-large-language-model-applications/ |
| Custom Input Sanitizers | Developed in-house or open-source libraries tailored to filter malicious prompts from user and external data inputs. | (Varies by implementation) |
| API Security Gateways / WAFs | Can help monitor and filter API calls made by or to the AI, detecting suspicious outbound connections or data exfiltration attempts. | (e.g., Akamai API Security, Cloudflare WAF) |
| SIEM/SOAR Platforms | For collecting and analyzing logs from AI applications and underlying infrastructure to detect anomalous behavior. | (e.g., Splunk, Microsoft Sentinel) |
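For the log-based detection that SIEM/SOAR platforms rely on, the AI application first has to emit events worth analyzing. The sketch below shows one possible shape for that: each tool call made on behalf of a session is recorded as a JSON line, with a simple per-session byte budget used to raise an alert flag. The class name `ToolCallMonitor`, the field names, and the `MAX_OUTBOUND_BYTES` threshold are assumptions chosen for illustration, not part of any product's schema.

```python
import json
import time
from collections import defaultdict

# Hypothetical budget: total bytes one session may send out via tool calls.
MAX_OUTBOUND_BYTES = 2_000


class ToolCallMonitor:
    """Tracks outbound payload sizes per session and emits JSON log events."""

    def __init__(self, limit: int = MAX_OUTBOUND_BYTES):
        self.limit = limit
        self.sent = defaultdict(int)  # session id -> cumulative bytes sent

    def record(self, session_id: str, tool: str, payload: str) -> str:
        """Record one tool call and return a JSON line for a log pipeline."""
        size = len(payload.encode("utf-8"))
        self.sent[session_id] += size
        event = {
            "ts": time.time(),
            "session": session_id,
            "tool": tool,
            "bytes": size,
            "session_total": self.sent[session_id],
            # True once the session exceeds its outbound budget.
            "alert": self.sent[session_id] > self.limit,
        }
        return json.dumps(event)
```

A fixed byte budget is a crude signal and would need tuning per application, but it captures the pattern named earlier: an unusually large volume of data leaving through a tool that normally sends short queries.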

Conclusion: A New Era of AI Security

The HackedGPT vulnerabilities serve as a stark reminder that as AI capabilities advance, so does the sophistication of potential attacks. The shift towards zero-click, indirect prompt injection fundamentally changes how we must approach AI security. For GPT-4o and GPT-5, these findings necessitate a proactive and robust security posture from both developers and users.

The ongoing commitment to research, transparency, and the implementation of strong security practices will be crucial in building trustworthy and resilient AI systems in the face of these evolving threats. Remaining vigilant and adapting security strategies to the unique challenges of LLMs is no longer optional; it is an absolute necessity.