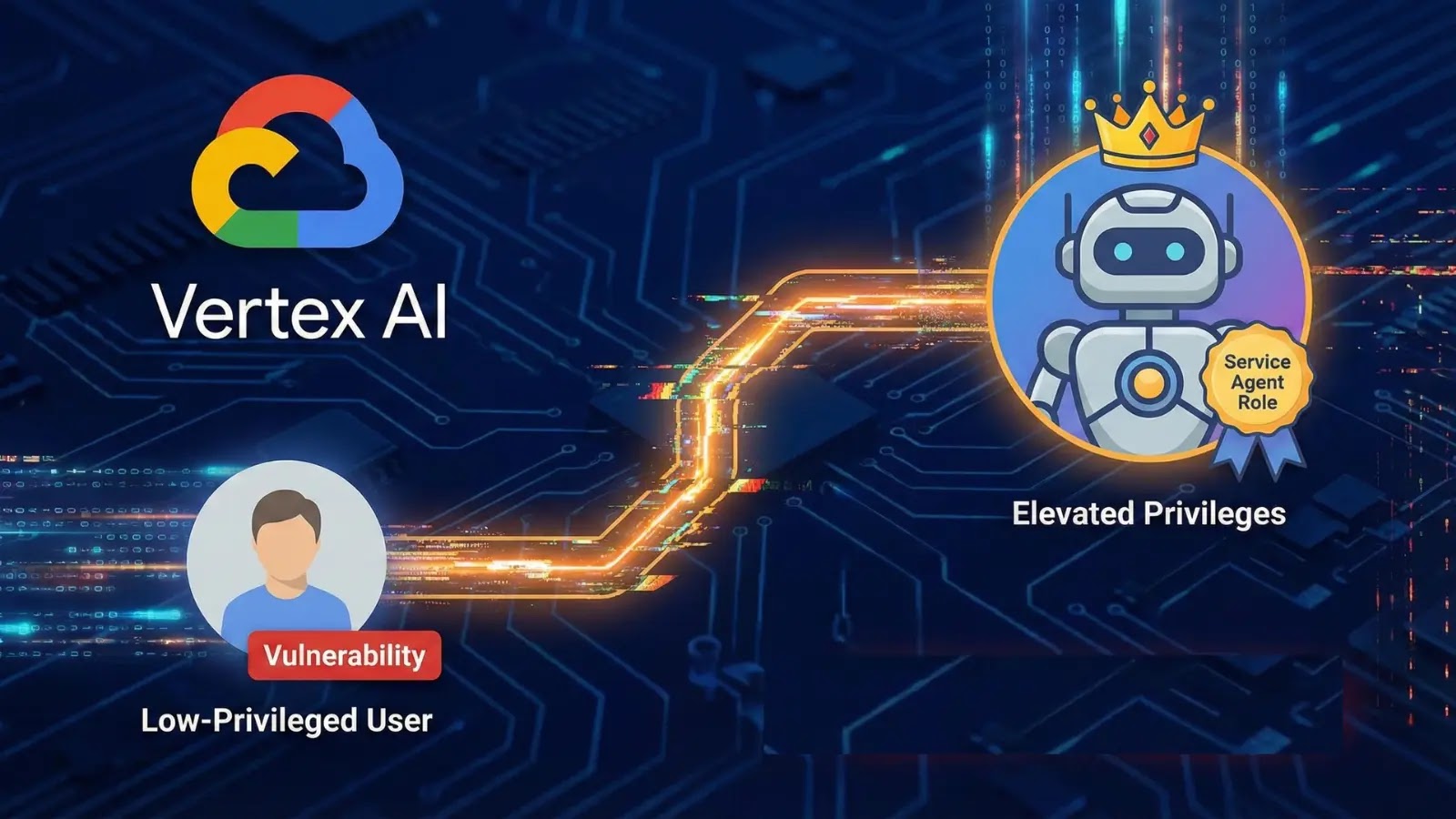

Google’s Vertex AI Vulnerability Enables Low-Privileged Users to Gain Service Agent Roles

Cloud security risks often arise from how a platform is configured rather than from a bug in its code. A recent disclosure by XM Cyber researchers describes such a case in Google’s Vertex AI machine learning platform: default configurations allow low-privileged users to escalate their access by hijacking Service Agent roles. Because Service Agents hold broad permissions, understanding this behavior matters to any organization that needs to protect data and workloads running on Vertex AI.

Understanding the Vertex AI Vulnerability

Google’s Vertex AI, which many organizations use to develop and deploy AI/ML models, ships with default settings that create a privilege escalation path. XM Cyber researchers found that these defaults allow users with minimal privileges to elevate their access. Specifically, the attack hijacks Service Agent roles, the managed identities that Google Cloud attaches to Vertex AI instances. The attacker ends up with permissions they were never granted directly, which threatens the confidentiality, integrity, and availability of resources.

Attack Vectors: Agent Engine and Ray on Vertex AI

The researchers pinpointed two primary attack vectors through which this privilege escalation can occur:

- Vertex AI Agent Engine: This component, central to the execution of AI tasks, can be manipulated because of its default configuration. A low-privileged user can exploit this to gain control over the Service Agents associated with the engine.

- Ray on Vertex AI: Ray, an open-source framework for distributed computing, presents a second avenue for exploitation when integrated with Vertex AI. As with the Agent Engine, its default setup allows Service Agent roles to be hijacked, granting unauthorized elevated access.

Google has reportedly deemed these behaviors to be “working as intended.” That stance may reflect the intended functionality for certain use cases, but it leaves a security blind spot: default configurations can create pathways for privilege escalation that customers must close themselves.

The Impact of Service Agent Hijacking

Service Agents are powerful managed identities, acting on behalf of Google Cloud services to interact with other resources. When a low-privileged user successfully hijacks a Service Agent role, they effectively inherit the comprehensive permissions associated with that agent. This can lead to:

- Unauthorized access to sensitive data and applications.

- Deployment of malicious code or models.

- Destruction or manipulation of cloud resources.

- Complete compromise of the Vertex AI environment, and potentially, interconnected Google Cloud services.

The ability of an attacker to move laterally and escalate privileges within a cloud environment is often the precursor to more significant breaches, making this particular vulnerability a serious concern for any organization leveraging Vertex AI.

Remediation Actions and Best Practices

Given Google’s “working as intended” assessment, no vendor patch should be expected, and no CVE ID has been publicly assigned to the behavior. Organizations must therefore mitigate the risk themselves by applying the principle of least privilege and reviewing their configurations carefully.

Configuration Review and Principle of Least Privilege

- Granular Role Assignment: Review all IAM policies and roles associated with Vertex AI projects and resources. Ensure that users and service accounts are granted only the absolute minimum permissions required for their tasks. Avoid broad roles like “Owner” or “Editor” for operational accounts.

- Custom Roles: Where possible, create custom IAM roles that precisely define necessary permissions, rather than relying on predefined roles that might include excessive privileges.

- Service Account Management: Audit all Service Accounts. Understand their purpose, who has permission to use them, and what resources they can access. Where user-managed service account keys exist, rotate them regularly.

- Dedicated Projects: Isolate sensitive AI/ML workloads into dedicated Google Cloud projects with strict IAM policies. This limits the blast radius of any potential compromise.
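As a starting point for the IAM review described above, the following minimal sketch scans an IAM policy for bindings that grant the broad “Owner” or “Editor” roles. It assumes the policy has already been exported as JSON (for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`) and loaded into a dictionary. The function name `find_broad_bindings` and the sample members are hypothetical, chosen only for illustration.

```python
# Basic roles the review above advises against for operational accounts.
BROAD_ROLES = {"roles/owner", "roles/editor"}


def find_broad_bindings(policy: dict) -> list[tuple[str, str]]:
    """Return sorted (role, member) pairs for bindings that grant a broad role.

    `policy` is expected to follow the IAM policy JSON layout:
    {"bindings": [{"role": "...", "members": ["..."]}, ...]}
    """
    findings = []
    for binding in policy.get("bindings", []):
        role = binding.get("role", "")
        if role in BROAD_ROLES:
            for member in binding.get("members", []):
                findings.append((role, member))
    return sorted(findings)


if __name__ == "__main__":
    # Hypothetical sample policy; in practice, load the exported JSON instead.
    sample_policy = {
        "bindings": [
            {"role": "roles/editor", "members": ["user:dev@example.com"]},
            {"role": "roles/aiplatform.user", "members": ["user:ml@example.com"]},
        ]
    }
    for role, member in find_broad_bindings(sample_policy):
        print(f"{member} holds {role}")
```

A check like this only flags the basic roles named in the list above. It does not evaluate whether narrower predefined roles are still excessive, which is where custom roles and the IAM Recommender come in.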

Monitoring and Auditing

- Cloud Audit Logs: Regularly review Google Cloud Audit Logs for suspicious activity related to Vertex AI and Service Agent usage. Look for unusual API calls, privilege changes, or resource modifications.

- Security Command Center: Utilize Google Cloud Security Command Center for continuous monitoring and threat detection across your Google Cloud environment, including Vertex AI.

- Alerting: Configure robust alerting for anomaly detection, such as unusual resource creation, permission changes, or access from unexpected locations.
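The log review described above can be partly automated. The sketch below filters exported Cloud Audit Log entries (as parsed JSON dictionaries) and surfaces two kinds of events for human review: IAM policy changes, and calls made by a Vertex AI Service Agent identity. It relies on the `protoPayload.methodName` and `protoPayload.authenticationInfo.principalEmail` fields of audit log entries. The marker string `@gcp-sa-aiplatform` is an assumption about how Vertex AI Service Agent email addresses are named and should be checked against the identities in your own project. The function name `flag_entries` is hypothetical.

```python
# Assumption: Vertex AI Service Agent emails contain this marker.
# Verify against the Service Agent identities listed in your project's IAM page.
SERVICE_AGENT_MARKER = "@gcp-sa-aiplatform"


def flag_entries(entries: list[dict]) -> list[dict]:
    """Return audit log entries worth a manual look, with the reasons attached."""
    flagged = []
    for entry in entries:
        payload = entry.get("protoPayload", {})
        principal = payload.get("authenticationInfo", {}).get("principalEmail", "")
        method = payload.get("methodName", "")

        reasons = []
        # Method names vary by service, so match the suffix case-insensitively.
        if method.lower().endswith("setiampolicy"):
            reasons.append("IAM policy change")
        if SERVICE_AGENT_MARKER in principal:
            reasons.append("service-agent principal")

        if reasons:
            flagged.append(
                {"principal": principal, "method": method, "reasons": reasons}
            )
    return flagged


if __name__ == "__main__":
    # Hypothetical entries; in practice, load exported audit logs as JSON.
    sample_entries = [
        {
            "protoPayload": {
                "methodName": "SetIamPolicy",
                "authenticationInfo": {"principalEmail": "dev@example.com"},
            }
        }
    ]
    for hit in flag_entries(sample_entries):
        print(hit["principal"], hit["method"], hit["reasons"])
```

Service Agent activity is normal in a healthy project, so a match here is a prompt for review rather than evidence of compromise. The value comes from comparing flagged calls against what the workload is expected to do.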

Secure Development Practices

- Code Review: Implement rigorous code reviews for all AI/ML models and applications deployed on Vertex AI to prevent the introduction of vulnerabilities.

- Dependency Scanning: Regularly scan third-party libraries and dependencies used in AI/ML pipelines for known vulnerabilities.

Tools for Detection and Mitigation

While this vulnerability stems from default configurations rather than a traditional software flaw, various tools can aid in continuous security posture management for Google Cloud environments.

| Tool Name | Purpose | Link |
|---|---|---|
| Google Cloud Security Command Center | Comprehensive security management and risk reporting for Google Cloud resources. | https://cloud.google.com/security-command-center |
| Google Cloud IAM Recommender | Automated recommendations for least-privilege IAM roles. | https://cloud.google.com/iam/docs/iam-recommender |
| Forseti Security | Open-source security toolkit for Google Cloud, including policy enforcement and inventory. | https://forsetisecurity.org/ |
| Cloud Logging / Cloud Monitoring | Centralized logging and monitoring for all Google Cloud services, essential for anomaly detection. | https://cloud.google.com/logging |

Conclusion

The finding that low-privileged users can gain Service Agent roles in Google’s Vertex AI through default configurations is a reminder that platform defaults deserve the same scrutiny as custom code. Because Google views this as intended behavior, the burden falls on organizations to enforce stringent IAM policies, adhere to the principle of least privilege, and invest in monitoring. Proactive security posture management, combined with a clear understanding of how Service Agents work, is the most effective defense against this class of privilege escalation and helps protect the integrity of AI/ML workloads.