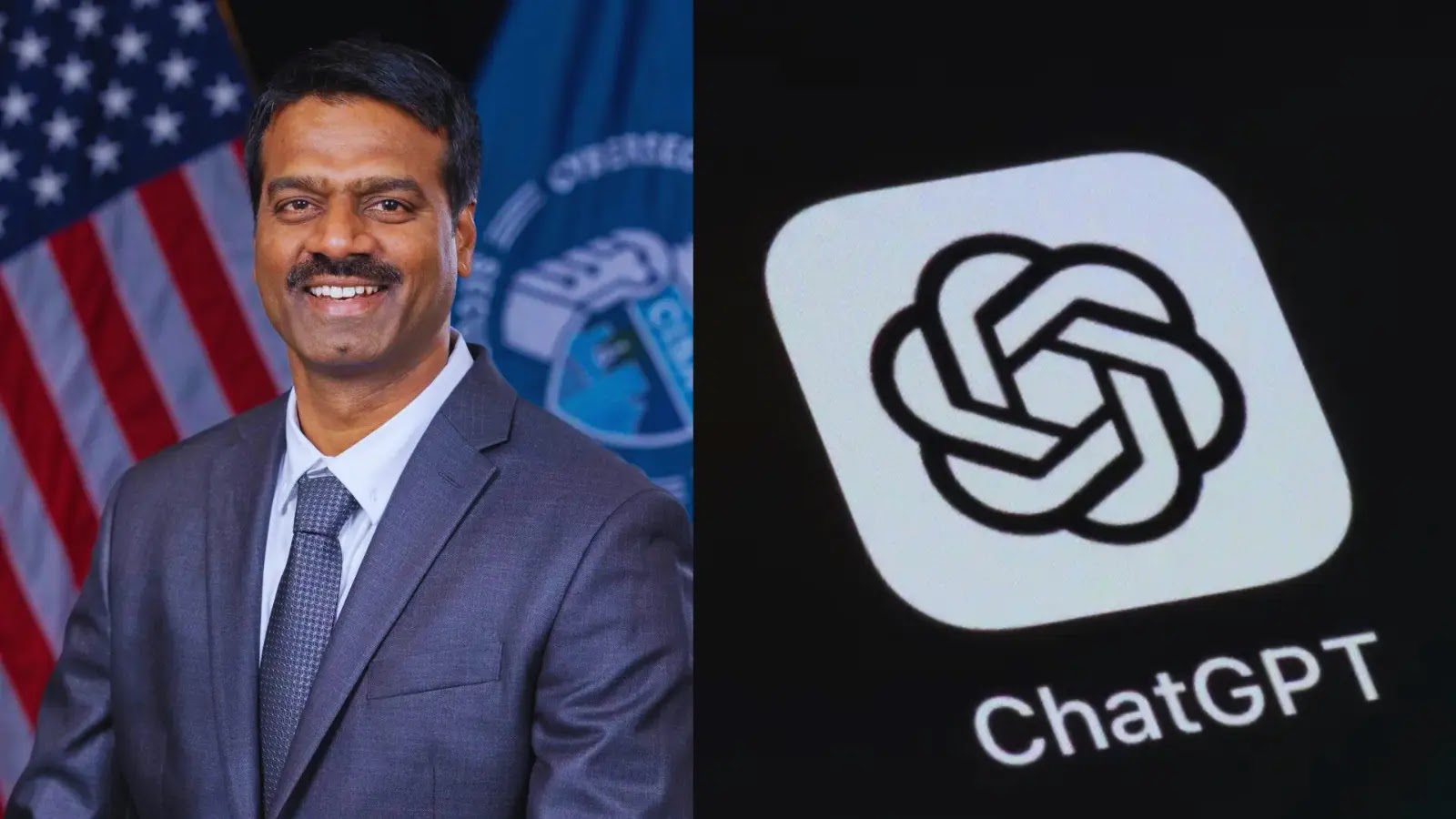

CISA Chief Uploaded Sensitive Documents into Public ChatGPT

The integrity of sensitive information within government agencies is paramount. So when news breaks that a top cybersecurity official inadvertently exposed sensitive documents, it sends ripples through the cybersecurity community. This incident, involving the acting director of the Cybersecurity and Infrastructure Security Agency (CISA) and the public version of ChatGPT, highlights a critical intersection of human error, emerging AI technologies, and the ever-present challenge of data security within federal networks.

The CISA ChatGPT Incident: A Closer Look

Last summer, Madhu Gottumukkala, CISA’s acting director, uploaded sensitive contracting documents into the public version of ChatGPT.

The documents were explicitly marked “for official use only,” and the uploads triggered multiple automated security alerts on federal networks. Those alerts are designed to detect and prevent data exfiltration, the unauthorized transfer of data out of an organization. Four Department of Homeland Security (DHS) officials confirmed the incident to Politico.

The incident did not stem from a software vulnerability. It was an operational security (OpSec) failure rooted in human behavior: sensitive data shared with an unauthorized service. It is a stark reminder that even in organizations dedicated to cybersecurity, human factors remain a significant vulnerability.

Understanding Data Exfiltration Risks with Public AI

Publicly accessible AI platforms like ChatGPT are powerful tools, but they demand careful attention to data privacy and security. When users enter data, including sensitive information, they may be contributing to the AI’s training data without realizing it, depending on the platform’s settings and terms of service. Once uploaded, sensitive information could end up in the data used to train future versions of the model and, in some scenarios, be regurgitated or inferred by the model in response to other users’ queries.

- Data Retention and Training: Many public AI services retain user inputs, and some use them to improve their models by default. Such retention can directly conflict with data governance regulations and privacy mandates for sensitive government or corporate data.

- Lack of Control: Organizations have little or no control over how a public AI platform stores, secures, or uses data once it is submitted. For classified or proprietary information, that lack of control is an unacceptable risk.

- Automated Alerts and Detection: The automated alerts triggered in the CISA incident show that existing data loss prevention (DLP) systems can detect this kind of transfer, even though the upload itself went through. These systems are crucial for identifying and blocking attempts to move sensitive data outside approved channels, whatever the method.
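As a rough illustration of the detection idea, the sketch below shows the kind of content-inspection rule a DLP system might apply to an outbound upload. It assumes the DLP component can see the destination URL and the extracted text of the file. The host lists, the marking patterns, and the `inspect_upload` function are all hypothetical; they do not describe any real DLP product or DHS's actual configuration.

```python
import re
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Hypothetical list of public AI service hosts. A real deployment would
# maintain this centrally and update it as new services appear.
PUBLIC_AI_HOSTS = {"chatgpt.com", "chat.openai.com"}

# Hypothetical internal AI service that is authorized for sensitive data.
APPROVED_HOSTS = {"ai.agency.example"}

# Hypothetical detectors for uppercase banner markings on government documents.
MARKINGS = {
    "FOUO": re.compile(r"\b(FOR OFFICIAL USE ONLY|FOUO)\b"),
    "CUI": re.compile(r"\b(CONTROLLED UNCLASSIFIED INFORMATION|CUI)\b"),
}


@dataclass
class Verdict:
    action: str  # "allow", "alert", or "block"
    reasons: list[str] = field(default_factory=list)


def host_in(host: str, hosts: set[str]) -> bool:
    """True if host is one of the listed hosts or a subdomain of one."""
    return any(host == h or host.endswith("." + h) for h in hosts)


def inspect_upload(url: str, text: str) -> Verdict:
    """Decide what to do with an outbound upload, given its URL and extracted text."""
    host = urlparse(url).hostname or ""  # urlparse lowercases the hostname
    found = [name for name, pattern in MARKINGS.items() if pattern.search(text)]
    if not found:
        return Verdict("allow")
    if host_in(host, APPROVED_HOSTS):
        # Marked content going to an authorized internal service is permitted.
        return Verdict("allow")
    reasons = [f"{name} marking bound for {host}" for name in found]
    if host_in(host, PUBLIC_AI_HOSTS):
        # Marked content headed to a public AI service: stop it outright.
        return Verdict("block", reasons)
    # Marked content headed to any other destination: allow, but alert reviewers.
    return Verdict("alert", reasons)


if __name__ == "__main__":
    print(inspect_upload("https://chatgpt.com/", "FOR OFFICIAL USE ONLY\nContract summary"))
    # Verdict(action='block', reasons=['FOUO marking bound for chatgpt.com'])
```

Production DLP tools typically add TLS inspection, document fingerprinting, and file-format parsing; this sketch captures only the core decision of matching a marking against a destination. Simple patterns like these also miss markings embedded in images and can flag unrelated uses of an acronym such as "CUI".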

The Peril of “For Official Use Only” Data

Documents marked “for official use only” (FOUO) are subject to specific handling guidelines to protect sensitive but unclassified information. These guidelines exist to prevent unauthorized disclosure that could compromise government operations, privacy, or security. Uploading such documents to a public platform directly violates these protocols and introduces significant risks, including:

- Compromise of Sensitive Operations: Details within contracting documents, even if unclassified, could reveal operational strategies, vendor information, or budget allocations that adversaries could exploit.

- Reputational Damage: Incidents of sensitive data exposure erode public trust and can tarnish the reputation of the involved agency and its leadership.

- Legal and Compliance Ramifications: Breaches of FOUO information can lead to internal investigations, disciplinary actions, and potentially broader legal or compliance issues, depending on the nature of the data.

Remediation Actions and Best Practices

Preventing similar incidents requires a multi-faceted approach combining policy, technology, and continuous education.

- Comprehensive AI Usage Policies: Agencies must develop and enforce clear, comprehensive policies regarding the use of public and private AI tools. These policies should explicitly state what types of data can and cannot be entered into these systems.

- Enhanced Data Loss Prevention (DLP) Systems: Continuously review and update DLP rules to identify and block sensitive data from being uploaded to unauthorized external services, including known public AI platforms.

- Mandatory Security Awareness Training: Regular and engaging training sessions are crucial. These sessions should cover:

  - The risks associated with public AI tools.

  - Proper handling of all sensitive data classifications (e.g., FOUO, Controlled Unclassified Information or CUI, and classified information).

  - The importance of personal responsibility in data protection.

- Secure Internal AI Solutions: For federal entities, exploring and implementing air-gapped or privately hosted AI solutions is critical for processing sensitive internal data without exposure to public networks.

- Incident Response Plan Review: Ensure that incident response plans are robust enough to address data exfiltration incidents involving emerging technologies like AI, including immediate containment, assessment, and notification procedures.
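A policy stating which data may go into which AI systems is easier to enforce when it is also machine-readable, so that DLP rules, proxies, or browser plugins can check it automatically. The sketch below assumes a hypothetical three-level sensitivity scheme and three tool tiers that mirror the public, privately hosted, and air-gapped options described above. The names `Classification`, `AITool`, `POLICY`, `document_level`, and `may_submit`, and the mapping itself, are illustrative assumptions, not an actual DHS or CISA policy.

```python
from enum import Enum, IntEnum


class Classification(IntEnum):
    """Hypothetical sensitivity levels, ordered from least to most sensitive."""
    PUBLIC = 0
    SENSITIVE_UNCLASSIFIED = 1  # e.g., FOUO or CUI
    CLASSIFIED = 2


class AITool(Enum):
    """Hypothetical tiers of AI service an employee might use."""
    PUBLIC_SAAS = "public"       # e.g., the public version of ChatGPT
    PRIVATE_HOSTED = "private"   # agency-hosted model on an authorized network
    AIR_GAPPED = "air_gapped"    # isolated system with no path to public networks


# Illustrative policy: which tool tiers may receive data at each level.
POLICY: dict[Classification, frozenset[AITool]] = {
    Classification.PUBLIC: frozenset(AITool),  # every tier
    Classification.SENSITIVE_UNCLASSIFIED: frozenset({AITool.PRIVATE_HOSTED, AITool.AIR_GAPPED}),
    Classification.CLASSIFIED: frozenset({AITool.AIR_GAPPED}),
}


def document_level(portions: list[Classification]) -> Classification:
    """Assumption: a document is handled at the level of its most sensitive portion."""
    if not portions:
        raise ValueError("cannot classify a document with no marked portions")
    return max(portions)


def may_submit(level: Classification, tool: AITool) -> bool:
    # Deny by default: a level missing from POLICY allows no tools at all.
    return tool in POLICY.get(level, frozenset())


if __name__ == "__main__":
    doc = document_level([Classification.PUBLIC, Classification.SENSITIVE_UNCLASSIFIED])
    print(doc.name, may_submit(doc, AITool.PUBLIC_SAAS))  # SENSITIVE_UNCLASSIFIED False
```

The deny-by-default lookup means a newly added sensitivity level grants no AI access until someone adds it to the policy explicitly.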

Looking Ahead: Securing AI Integration

The CISA incident underscores the delicate balance between leveraging advanced AI tools for productivity and ensuring ironclad data security. While AI offers immense potential to enhance government operations, its integration must be guided by robust security protocols and a deep understanding of its inherent risks. Agencies must move proactively to educate their workforce, implement stringent technical controls, and cultivate a culture where data security is a shared responsibility at all levels.