Augustus – Open-source LLM Vulnerability Scanner With 210+ Attacks Across 28 LLM Providers

The rapid adoption of Large Language Models (LLMs) has introduced a new frontier for cybersecurity, presenting novel attack surfaces and complex vulnerabilities. Securing these sophisticated AI systems is no longer an academic exercise; it’s a critical operational imperative for any organization leveraging LLM technology. Bridging the gap between cutting-edge research and practical defense, a new open-source vulnerability scanner named Augustus has emerged as a significant development.

Introducing Augustus: The Open-Source LLM Vulnerability Scanner

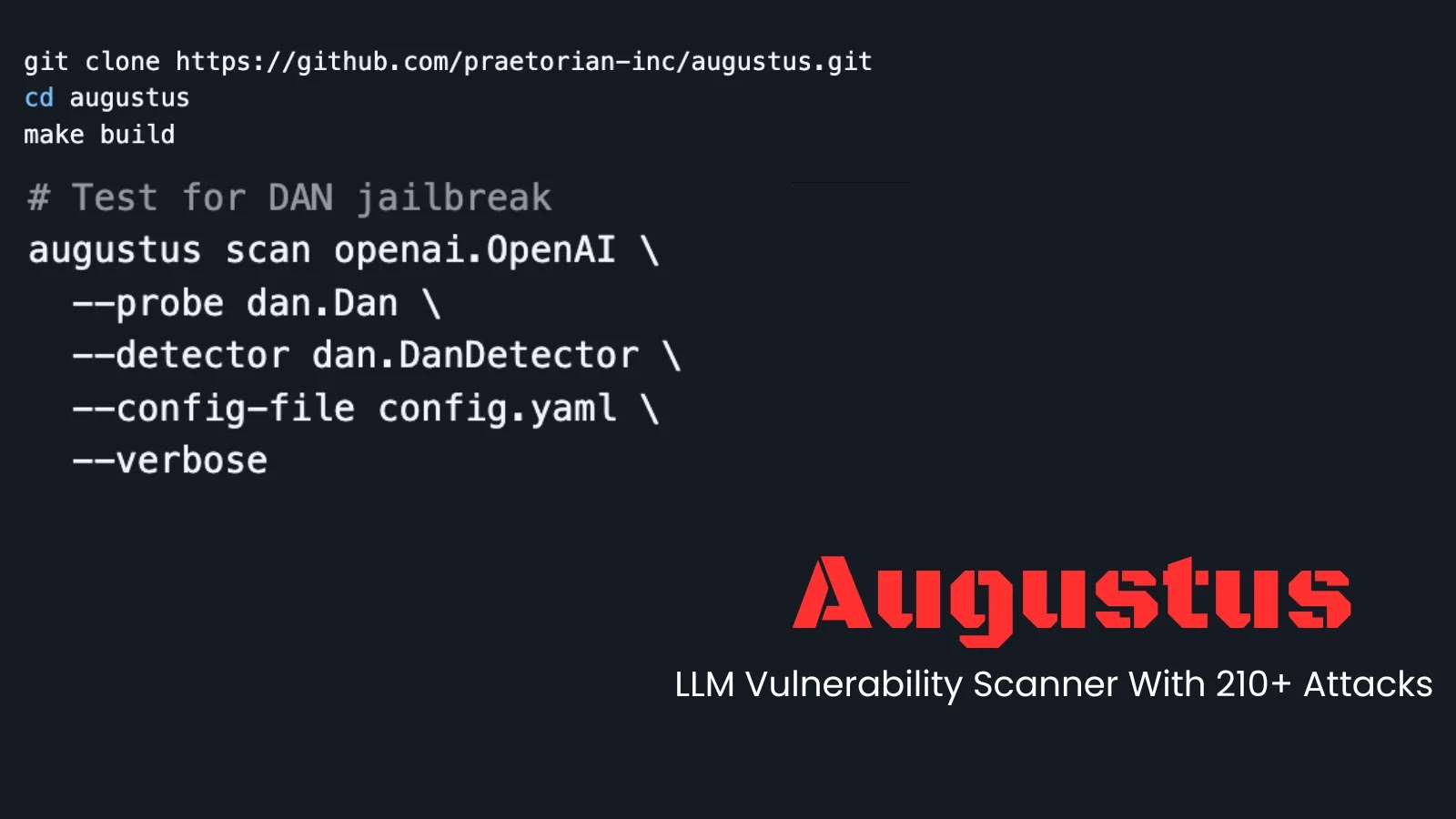

Developed by Praetorian, Augustus is engineered to provide robust security testing for LLMs. This specialized tool directly addresses the unique challenges in safeguarding LLM deployments. Instead of relying on disparate academic scripts or manual testing, Augustus consolidates advanced adversarial techniques into a single, user-friendly binary. Its design philosophy centers on making sophisticated LLM vulnerability scanning accessible and efficient for security teams.

Comprehensive Attack Surface Coverage

What sets Augustus apart is its expansive capability. The scanner currently supports over 210 distinct adversarial attacks, demonstrating a deep understanding of the diverse threat landscape targeting LLMs. These attacks go beyond generic input manipulation, encompassing a wide array of techniques designed to exploit the specific characteristics of language models.

- Prompt Injection: Subtly manipulating prompts to force unintended behavior or extract sensitive information.

- Data Exfiltration: Tricking the LLM into revealing confidential data it may have been trained on or has access to.

- Jailbreaking: Bypassing safety filters and ethical guidelines embedded within the LLM.

- Model Denial of Service: Overloading or confusing the model to impair its functionality.

- Misinformation Generation: Coercing the LLM to produce false or misleading content.

Broad Provider Compatibility

Augustus isn’t limited to a single LLM provider or architecture. Its design emphasizes broad compatibility, capable of launching attacks against 28 different LLM providers. This broad support means that organizations utilizing various LLM platforms, from commercial APIs to self-hosted open-source models, can leverage Augustus for consistent and comprehensive security assessments. This versatility is crucial in an ecosystem where organizations often employ a mix of LLM solutions.

Addressing the LLM Security Gap

The development of Augustus highlights a critical need within the cybersecurity community. While traditional vulnerability scanners excel at network, application, and infrastructure security, they are ill-equipped to identify and mitigate threats unique to LLMs. Augustus fills this void by providing a specialized tool that understands the nuances of LLM interactions and potential exploitation vectors. It transforms abstract research into a practical security solution, enabling proactive identification and remediation of weaknesses before they are exploited in the wild.

Remediation Actions

Identifying vulnerabilities is only the first step. Effective remediation is paramount to securing LLM deployments. Here are key actions organizations should take based on findings from tools like Augustus:

- Implement Robust Input Validation and Sanitization: Beyond basic checks, employ LLM-specific validation to detect and neutralize malicious prompt injection attempts. Consider techniques like input normalization and AI-based anomaly detection.

- Employ Output Filtering and Moderation: Critically analyze LLM outputs before they are presented to users. Implement content filters to prevent the generation of harmful, biased, or exfiltrated information.

- Regularly Update and Patch LLM Models: Just like any software, LLMs and their underlying frameworks receive security updates. Stay informed about security advisories related to your LLM providers.

- Utilize Principle of Least Privilege: Ensure that LLMs and their associated services only have access to the data and functionalities absolutely necessary for their operation.

- Adversarial Retraining and Fine-tuning: For proprietary or fine-tuned models, consider adversarial retraining to make the model more resilient to known attack types.

- Monitor LLM Interactions: Implement comprehensive logging and monitoring of LLM inputs, outputs, and internal states to detect anomalous behavior Indicative of an attack.

- Stay Informed on Latest Threats: The LLM threat landscape is rapidly evolving. Regularly consult resources like the CVE database for newly disclosed vulnerabilities impacting LLMs. While specific CVEs for generic LLM attacks are still emerging, frameworks like OWASP Top 10 for LLMs provide a good starting point.

Detection and Mitigation Tools

While Augustus serves as a powerful scanning tool, a multi-layered approach to LLM security is essential. Here are some categories of tools that complement Augustus:

| Tool Category | Purpose | Examples/Approach |

|---|---|---|

| Input Sanitization Libraries | Cleanse and validate user inputs before they reach the LLM to prevent prompt injection. | Open-source libraries for text normalization, regex filtering, and content moderation. |

| Output Moderation APIs | Filter and assess LLM-generated content for harmful, biased, or inappropriate outputs. | Commercial APIs (e.g., from OpenAI, Google Cloud) or open-source content filters. |

| LLM Firewalls/Proxies | Act as an intermediary layer to monitor and control interactions with LLMs, enforcing security policies. | Specialized API gateways designed for LLM traffic. |

| Adversarial Training Frameworks | Improve LLM robustness by training models on adversarial examples. | TensorFlow Adversarial Validation, PyTorch libraries for adversarial training. |

| Monitoring & Logging Solutions | Collect and analyze LLM interaction data to detect anomalies and potential attacks. | SIEM systems integrated with LLM API logs or internal model telemetry. |

Conclusion

Augustus represents a significant step forward in securing the evolving landscape of AI. By offering an open-source, production-grade vulnerability scanner with extensive attack capabilities and broad provider support, Praetorian has equipped cybersecurity professionals with a vital tool. As LLMs become more integrated into critical systems, comprehensive security testing using solutions like Augustus will be indispensable for building resilient and trustworthy AI applications.