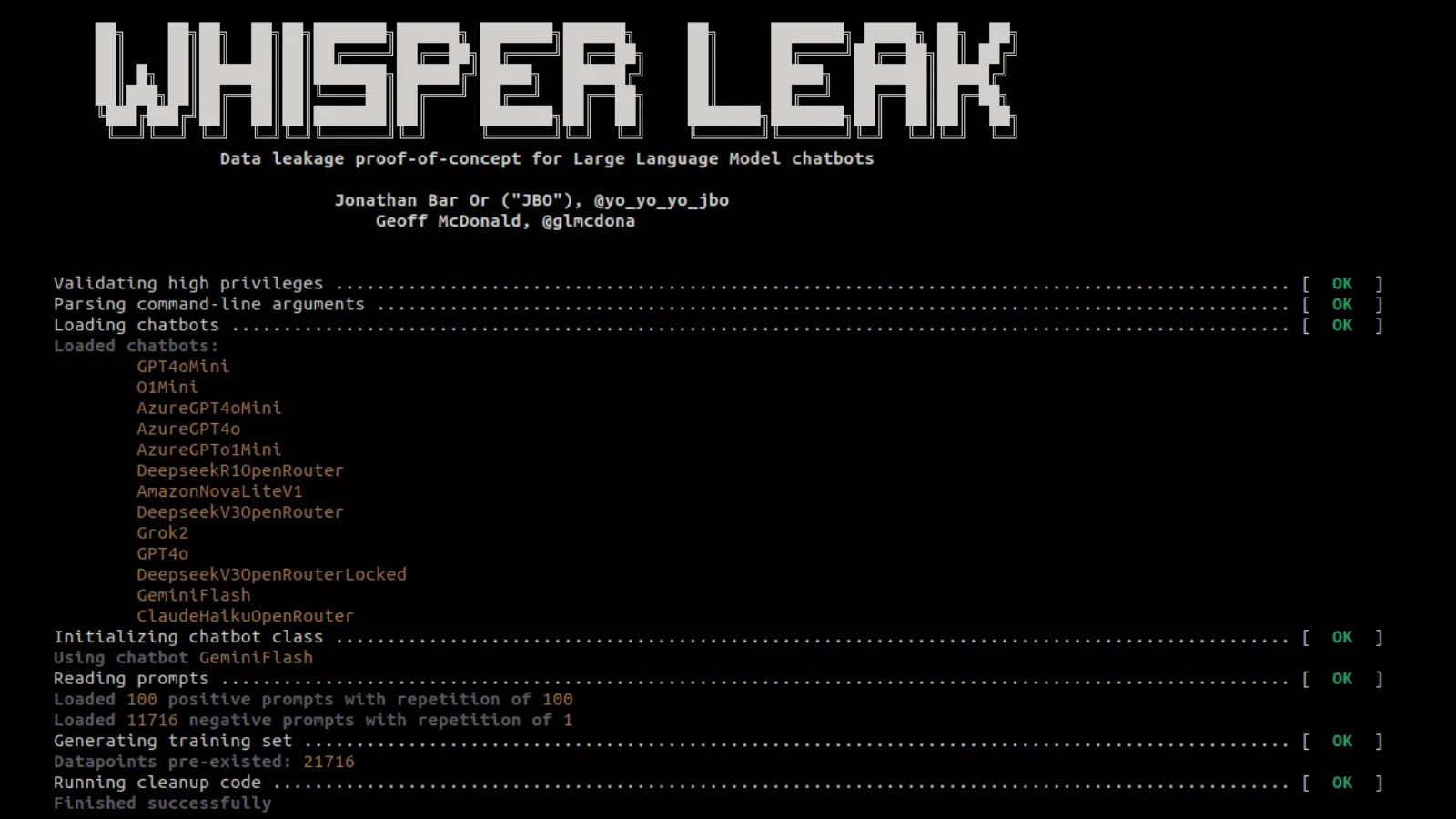

New Whisper Leak Toolkit Exposes User Prompts to Popular AI Agents within Encrypted Traffic

Whisper Leak Toolkit: Exposing AI Prompts Through Encrypted Traffic

In an era where artificial intelligence is increasingly integrated into daily life, the privacy of our interactions with AI agents is paramount. A significant discovery, dubbed “Whisper Leak,” describes a side-channel attack that can compromise this privacy. It allows an observer of network traffic to infer what a user's prompts to popular AI chatbots are about, even when the communications are encrypted in transit with TLS. The implications for individuals, enterprises, and national security are profound, and they expose a common misunderstanding about what encryption actually hides.

Understanding the Whisper Leak Vulnerability

The Whisper Leak vulnerability exploits a fundamental characteristic of network communication: the size and timing of data packets. Encryption scrambles the content of messages, but it does not hide metadata such as packet sizes or the intervals between packets. Researchers have demonstrated that by analyzing these patterns, eavesdroppers can infer sensitive details about user prompts to AI agents, such as the topic of a conversation.

This side-channel attack doesn’t break the encryption itself. Instead, it relies on observable network characteristics that correlate with the text being exchanged between the user and the AI model. For instance, longer or more complex prompts often result in larger or more numerous packets, and a response that is streamed back piece by piece produces a sequence of packet sizes and gaps that reflects the generated text. By building a profile of typical AI interactions, an attacker can then match observed traffic patterns to likely prompt content with a surprising degree of accuracy.
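
To make the idea concrete, here is a minimal Python sketch of the kind of metadata summary an observer could compute from a single encrypted stream. The function name `trace_features`, the trace format, and the choice of features are illustrative assumptions, not the researchers' actual pipeline; a real classifier would be trained on many such summaries.

```python
from statistics import mean, pstdev


def trace_features(trace):
    """Summarize one encrypted stream using metadata only.

    `trace` is a non-empty list of (timestamp_seconds, payload_bytes)
    tuples, one per observed packet. Payloads are never decrypted; only
    their sizes and arrival times are used. (Hypothetical format.)
    """
    times = [t for t, _ in trace]
    sizes = [s for _, s in trace]
    # Inter-arrival gaps between consecutive packets.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return {
        "packet_count": len(sizes),
        "total_bytes": sum(sizes),
        "mean_size": mean(sizes),
        "size_stdev": pstdev(sizes),
        "mean_gap": mean(gaps) if gaps else 0.0,
    }


# Three packets observed over 0.3 seconds.
features = trace_features([(0.0, 100), (0.1, 120), (0.3, 80)])
print(features["packet_count"], features["total_bytes"])
```

Even this crude summary differs between a short exchange and a long streamed answer, which is why hiding content alone does not hide everything.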

The threat actors capable of deploying such an attack range from nation-state intelligence agencies with advanced resources to internet service providers (ISPs) or even sophisticated Wi-Fi snoopers operating in public spaces. Their ability to infer sensitive prompt details poses a significant risk for intellectual property, personal data, and confidential communications.

Impact on AI Privacy and Security

The Whisper Leak toolkit exposes a critical privacy gap for users of AI chatbots. Consider a scenario where a user discusses proprietary business strategies, sensitive medical information, or personal legal matters with an AI agent for drafting assistance or analysis. Although the conversation is encrypted, the Whisper Leak attack could allow an adversary to infer the general topic of the prompt, and possibly narrower details such as key entities or specific phrases.

- Corporate Espionage: Competitors or state-sponsored actors could infer confidential R&D, merger plans, or financial strategies discussed with AI tools.

- Individual Privacy Breaches: Sensitive personal health information, financial details, or legal consultations could be compromised, leading to identity theft or blackmail.

- National Security Risks: Intelligence gathering by adversaries could be significantly enhanced by inferring classified information discussed with AI systems by government employees.

- Loss of Trust: The perceived security of encrypted communications with AI agents is undermined, leading to a decline in user confidence and adoption of these powerful tools.

Remediation Actions for Users and Developers

Addressing the Whisper Leak vulnerability requires a multi-faceted approach involving both user-side precautions and developer-side mitigations. There is no specific CVE associated with Whisper Leak at this time, as it’s a technique rather than a software flaw, but its impact is analogous to a critical vulnerability.

For Users:

- Assume Compromise: Treat all interactions with AI agents, even those supposedly encrypted, as potentially susceptible to metadata analysis. Avoid discussing highly sensitive or proprietary information.

- Vary Prompt Length and Complexity: While difficult to implement consistently, conscious efforts to vary prompt structures and lengths might introduce noise to network traffic analysis.

- Utilize VPNs (with Caution): A VPN can hide your traffic patterns from your ISP or local Wi-Fi snoopers, but the VPN provider itself could perform the same analysis. Choose a reputable VPN provider.

- Stay Informed: Keep abreast of new security advisories and best practices for interacting with AI systems.

For Developers and AI Service Providers:

- Traffic Padding and Obfuscation: Implement techniques to add random, non-meaningful data to packets and vary transmission timings. This can generate “noise” that makes side-channel analysis significantly harder.

- Protocol-Level Protections: Explore network protocols that inherently resist timing and size-based inference attacks, such as those used in anonymity networks (e.g., Tor, though not without its own complexities).

- Differential Privacy Techniques: Apply differential privacy mechanisms to the input and output streams of AI models to mask patterns that could be inferred from network traffic.

- Educate Users: Clearly communicate the inherent privacy limitations and risks associated with AI interactions, even under encryption.

- Continuous Monitoring and Research: Invest in ongoing research to detect and mitigate new side-channel attacks targeting AI communications.
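
As a rough illustration of the traffic-padding idea from the developer list above, the following Python sketch pads each outgoing chunk to a multiple of a fixed bucket size before it is encrypted, so that the observable length reveals only a coarse size class. The helper names `pad_chunk` and `unpad_chunk`, the 4-byte length prefix, and the 256-byte bucket are assumptions made for this example, not a description of any provider's deployed mitigation. The sketch does not address timing.

```python
import secrets


def pad_chunk(chunk: bytes, bucket: int = 256) -> bytes:
    """Pad `chunk` so the result length is a multiple of `bucket`.

    A 4-byte big-endian length prefix lets the receiver strip the
    padding. Padding must be applied before encryption to be useful.
    """
    body = len(chunk).to_bytes(4, "big") + chunk
    # Round the length up to the next multiple of the bucket size.
    padded_len = -(-len(body) // bucket) * bucket
    return body + secrets.token_bytes(padded_len - len(body))


def unpad_chunk(padded: bytes) -> bytes:
    """Recover the original chunk from the output of `pad_chunk`."""
    length = int.from_bytes(padded[:4], "big")
    return padded[4:4 + length]


short = pad_chunk(b"Hi")
longer = pad_chunk(b"A somewhat longer streamed chunk of text")
print(len(short), len(longer))  # both chunks have the same padded size
```

Larger buckets leak less but cost more bandwidth, so the bucket size is a trade-off each service would need to tune.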

Tools for Network Anomaly Detection

While direct mitigation for Whisper Leak is complex, monitoring network traffic for anomalous patterns can be a part of a broader security strategy.

| Tool Name | Purpose | Link |
|---|---|---|
| Wireshark | Network protocol analyzer for deep packet inspection and traffic pattern analysis. | https://www.wireshark.org/ |
| Snort | Open-source intrusion detection system (IDS) capable of real-time traffic analysis and packet logging. | https://www.snort.org/ |
| Zeek (formerly Bro) | Powerful network security monitor that performs comprehensive traffic analysis and generates rich logs. | https://zeek.org/ |

Conclusion

The Whisper Leak toolkit highlights a sophisticated and concerning evolution in side-channel attacks, one that directly targets the privacy of interactions with popular AI agents. Encryption remains a cornerstone of digital security, but it does not prevent inference from traffic metadata such as packet sizes and timing. This discovery demands attention from both AI service providers and users. By understanding the mechanics of such attacks and applying remediations that range from traffic obfuscation to more cautious user behavior, we can better safeguard sensitive prompts and preserve trust in AI systems.