NIST Releases Control Overlays to Manage Cybersecurity Risks in the Use and Development of AI Systems

The rapid advancement and ubiquitous integration of Artificial Intelligence (AI) systems present unprecedented opportunities, yet they simultaneously introduce complex cybersecurity challenges. As AI permeates critical infrastructure, business operations, and even consumer devices, the need for robust, standardized risk management frameworks becomes paramount. Without a clear roadmap for securing AI, organizations face escalating risks ranging from data breaches and intellectual property theft to system manipulation and catastrophic failures.

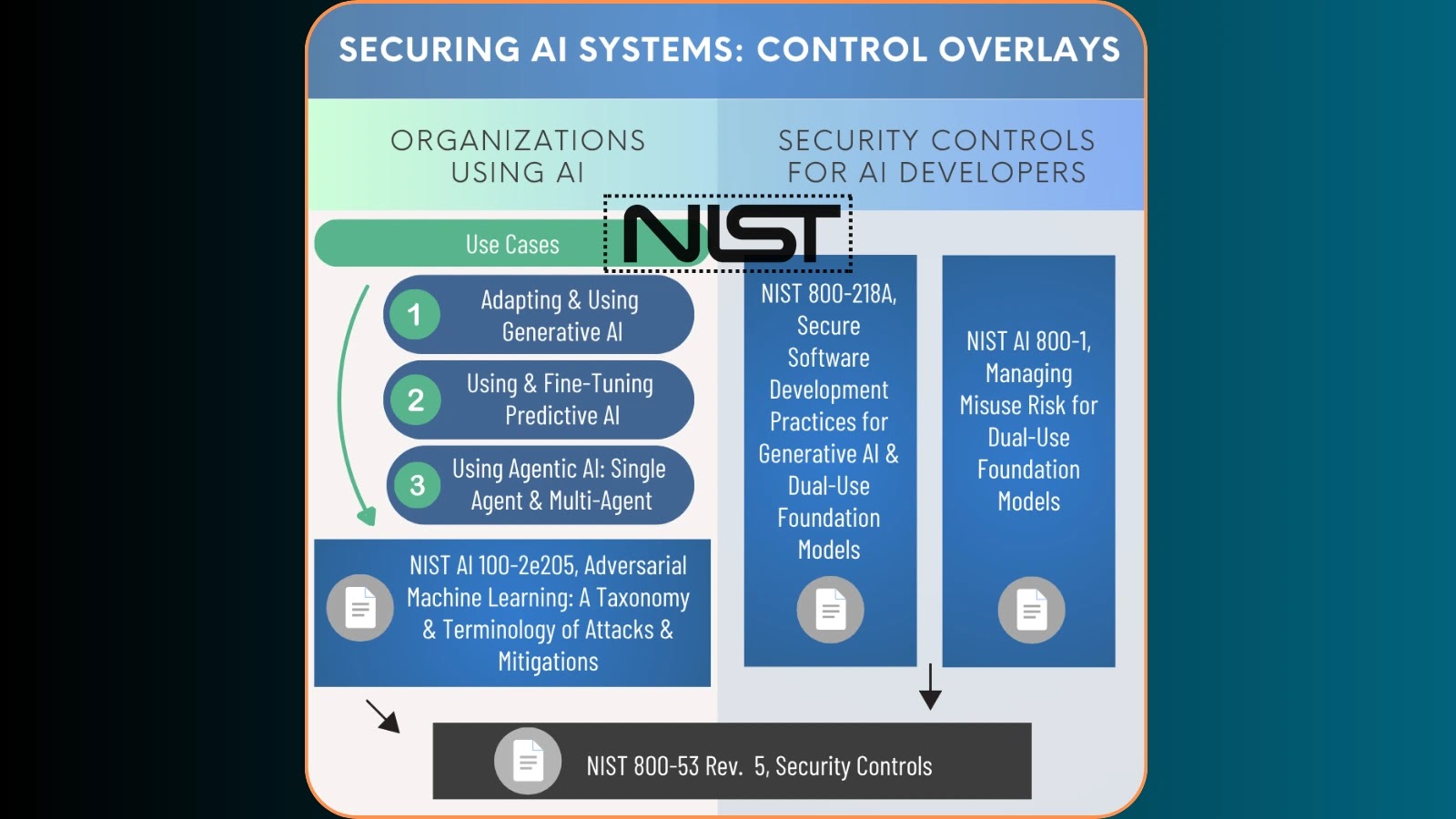

Recognizing this need, the National Institute of Standards and Technology (NIST) has taken a significant step forward. On August 14, 2025, NIST unveiled a concept paper proposing NIST SP 800-53 Control Overlays for Securing AI Systems. The initiative offers a structured approach to managing cybersecurity risks in the specific context of AI development and deployment. This post examines the implications of the proposed overlays and offers insights for cybersecurity professionals and organizations navigating the AI landscape.

Understanding NIST SP 800-53 Control Overlays

NIST Special Publication 800-53, “Security and Privacy Controls for Information Systems and Organizations,” serves as a cornerstone for federal agencies and numerous private sector entities in establishing and maintaining a robust cybersecurity posture. It provides a catalog of security and privacy controls to protect organizational operations and assets, individuals, other organizations, and the Nation from a diverse set of threats and risks.

Within the NIST framework, control overlays are specialized sets of controls tailored to specific technology types, operational environments, or risk profiles. The proposed AI control overlays extend the foundational principles of SP 800-53 to cover the distinct security characteristics of AI systems, including considerations unique to machine learning algorithms, data pipelines, model training environments, inference processes, and human-in-the-loop interactions. The goal is to give organizations a standardized yet flexible mechanism to identify, implement, and assess the appropriate security controls for their AI applications, and so manage risks proactively.
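
To make the idea of tailoring concrete, the following is a minimal sketch of an overlay modeled as additions, removals, and supplemental guidance applied to a baseline set of controls. The `Overlay` class, the `apply_overlay` function, the chosen baseline, and all guidance text are hypothetical and invented for illustration. The control identifiers exist in SP 800-53 Rev. 5, but nothing here is taken from the NIST concept paper.

```python
from dataclasses import dataclass, field


@dataclass
class Overlay:
    """Hypothetical overlay: tailoring applied on top of a control baseline."""
    name: str
    added: set[str] = field(default_factory=set)
    removed: set[str] = field(default_factory=set)
    guidance: dict[str, str] = field(default_factory=dict)


def apply_overlay(baseline: set[str], overlay: Overlay) -> dict[str, str]:
    """Return the tailored control set, mapping control ID to extra guidance."""
    selected = (baseline | overlay.added) - overlay.removed
    # Controls without supplemental guidance map to an empty string.
    return {cid: overlay.guidance.get(cid, "") for cid in sorted(selected)}


# Illustrative values only: this selection and the guidance text are
# assumptions made for the example, not content from any NIST overlay.
baseline = {"AC-3", "RA-3", "SI-4"}
ai_overlay = Overlay(
    name="example-ai-overlay",
    added={"SI-7", "SR-3"},
    guidance={
        "SI-4": "Also monitor model inputs and outputs for anomalies.",
        "SI-7": "Verify integrity of training data and model artifacts.",
    },
)
tailored = apply_overlay(baseline, ai_overlay)
for control_id, note in tailored.items():
    print(control_id, note)
```

Keeping the overlay separate from the baseline means the same baseline can be reused across systems, with only the AI-specific tailoring changing from one deployment to the next.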

The Urgency of AI Cybersecurity Frameworks

The development and deployment of AI systems introduce novel attack surfaces and vulnerabilities that traditional cybersecurity frameworks may not fully address. Consider scenarios such as poisoned training data leading to biased or malicious model outputs, adversarial inputs crafted so that a model evades detection, or compromised AI systems being used for automated cyber espionage. For example, a model inversion or data leakage vulnerability might exploit weaknesses in how an AI system protects the privacy of its training data.

Without specific guidance, organizations are left to devise ad hoc security measures, leading to inconsistent application of controls, significant gaps in protection, and potential regulatory non-compliance. NIST’s initiative directly addresses this by providing a common language and structured methodology for AI cybersecurity risk management, promoting consistency and enhancing the collective security posture of AI systems across sectors.

Key Focus Areas of the Proposed Overlays

While the full details of the concept paper entail extensive technical specifications, the overarching focus areas for securing AI systems are expected to revolve around several critical components:

- Data Integrity and Provenance: Ensuring the trustworthiness of data used throughout the AI lifecycle, from collection to training and inference. This includes protection against data poisoning and unauthorized alteration.

- Model Robustness and Resilience: Safeguarding AI models against adversarial attacks, ensuring their continued accuracy and reliability even under malicious input. This also covers resistance to model extraction and evasion techniques.

- System Confidentiality and Privacy: Protecting sensitive information processed by AI systems, including handling of personally identifiable information (PII) and compliance with privacy regulations.

- Accountability and Explainability: Establishing mechanisms for understanding AI system decisions and outputs, crucial for auditing, debugging, and addressing biases, particularly in regulated environments.

- Supply Chain Risk Management: Addressing risks associated with third-party AI components, pre-trained models, and development tools that may introduce vulnerabilities.

- Continuous Monitoring and Assessment: Implementing ongoing security monitoring tailored to AI system behavior and performance, enabling early detection of anomalies or compromises.
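
As one illustration of the data integrity and provenance item above, the sketch below records a SHA-256 digest for every file in a dataset directory and later reports any file that was modified, removed, or added. The function names and the manifest layout are assumptions made for this example. The technique is ordinary file hashing, not a mechanism prescribed by the NIST concept paper.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file under root (relative POSIX path) to its digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff_manifest(root: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare the current files under root with a recorded manifest."""
    current = build_manifest(root)
    return {
        "modified": sorted(
            k for k in manifest if k in current and current[k] != manifest[k]
        ),
        "missing": sorted(k for k in manifest if k not in current),
        "unexpected": sorted(k for k in current if k not in manifest),
    }
```

A team might call `build_manifest` when a dataset is approved for training, store the result outside the dataset directory (ideally signed or access-controlled), and run `diff_manifest` before each training job. Any non-empty list in the result is a reason to stop and investigate.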

Implications for Organizations and the Future of AI Security

The proposed NIST control overlays carry significant implications for any organization involved in the use, development, or deployment of AI systems:

- Standardization and Best Practices: Provides a foundational standard, aligning AI security practices across various industries and regulatory landscapes.

- Risk Mitigation: Offers a structured approach to identify, assess, and mitigate AI-specific cybersecurity risks, leading to more secure and trustworthy AI deployments.

- Compliance and Assurance: Aids organizations in demonstrating due diligence and meeting existing or emerging regulatory requirements related to AI security and ethical use.

- Enhanced Trust: By providing a framework for securing AI, it fosters greater public and stakeholder trust in AI technologies.

- Future-Proofing: Establishes a flexible framework that can adapt as AI technologies evolve, ensuring long-term applicability.

Organizations should begin familiarizing themselves with the principles outlined in the NIST SP 800-53 framework and prepare to integrate these AI-specific overlays into their existing cybersecurity programs. This will necessitate collaboration between cybersecurity teams, AI development teams, and legal/compliance departments to ensure comprehensive coverage and effective implementation.

Remediation Actions and Proactive Measures

While the proposed NIST overlays provide a framework for prevention and response, organizations need not wait for them to be finalized. Consider the following remediation and preparedness actions:

- Conduct AI-Specific Risk Assessments: Evaluate current AI systems for vulnerabilities unique to machine learning, data handling, and model deployment.

- Implement Data Governance for AI: Establish rigorous controls over data quality, integrity, and privacy throughout the AI lifecycle.

- Adopt Secure AI Development Practices: Integrate security into the AI development pipeline, including secure coding, model validation, and adversarial robustness testing.

- Train Personnel: Educate AI developers, data scientists, and security teams on AI-specific threats (e.g., adversarial attacks, data poisoning, and model extraction) and the application of security controls.

- Monitor AI System Behavior: Implement specialized monitoring tools to detect anomalous behavior in AI model outputs, performance degradation, or data drift that might indicate compromise.

- Plan for Incident Response: Develop incident response plans specifically tailored for AI system compromises, including procedures for model rollback, data integrity restoration, and bias mitigation.
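
The monitoring item above can be sketched with a simple drift statistic. The example below computes the Population Stability Index (PSI) between a baseline sample and a recent sample of one numeric signal, such as a model's output score. PSI is chosen here only as a familiar illustration and is not named in the NIST concept paper. The function name, bin count, and smoothing constant are assumptions.

```python
import math


def psi(baseline: list[float], recent: list[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between two samples of one numeric signal."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to width 1.0
    # when the baseline is constant so we never divide by zero.
    width = (hi - lo) / bins or 1.0

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Usage: compare last week's scores (baseline) with today's scores.
baseline_scores = [i / 100 for i in range(100)]
todays_scores = [0.5 + i / 200 for i in range(100)]
drift = psi(baseline_scores, todays_scores)
if drift > 0.25:  # assumed alert threshold, see note below
    print(f"Possible drift, PSI = {drift:.2f}")
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these thresholds are conventions rather than guarantees and should be tuned per signal. A high value indicates that the distribution changed, not why it changed, so it is a trigger for investigation rather than proof of compromise.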

Conclusion

NIST’s release of proposed SP 800-53 Control Overlays for Securing AI Systems represents a critical juncture in the maturation of AI technology. It provides a much-needed standardized approach to manage the complex cybersecurity risks inherent in AI, enabling organizations to build, deploy, and operate AI systems with greater assurance and resilience. As AI continues its transformative journey, robust security frameworks like those offered by NIST will be indispensable in fostering trustworthy innovation and safeguarding our digital future.