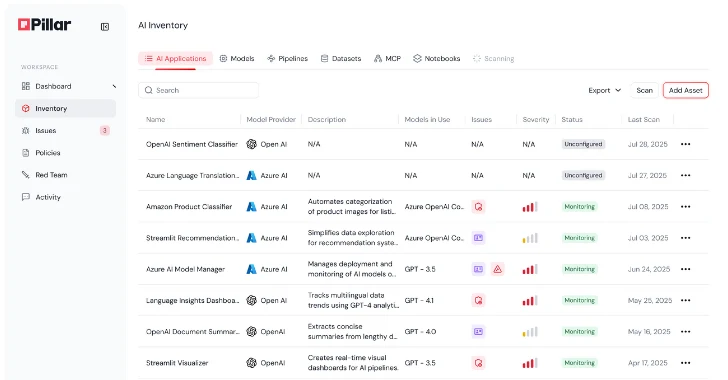

Product Walkthrough: A Look Inside Pillar’s AI Security Platform

The rapid advancement of Artificial Intelligence (AI) has brought unprecedented capabilities to various industries. However, this evolution also introduces novel and complex security challenges. As organizations increasingly integrate AI into their core operations, ensuring the integrity, confidentiality, and availability of these systems becomes paramount. Traditional cybersecurity paradigms, while robust for conventional software, often fall short in addressing the unique vulnerabilities inherent in AI models and their development lifecycles. This necessitates a specialized and holistic approach to AI security, an area where innovative platforms are emerging to provide much-needed trust.

Pillar Security: A New Paradigm for AI Trust

Pillar Security is on the front lines of addressing these critical AI security concerns. Their platform is designed to provide comprehensive coverage across the entire software development and deployment lifecycle, specifically tailored to ensure trust in AI systems. Unlike generalized security solutions, Pillar Security’s approach is purpose-built to understand and mitigate the unique threats that target AI, from model poisoning and adversarial attacks to data integrity compromises and privacy breaches.

Holistic Approach to AI Threat Detection

A core strength of Pillar Security’s offering lies in its holistic approach. This isn’t merely about scanning for known vulnerabilities; it’s about establishing new methodologies for detecting subtle and sophisticated AI threats. The platform covers every stage of the lifecycle, embedding security considerations from initial development through to ongoing deployment and monitoring. This proactive stance is crucial for identifying emerging threats that might bypass conventional security controls.

The platform introduces novel ways of detecting AI threats, moving beyond signature-based detection to incorporate behavioral analysis and anomaly detection specifically tuned for AI models and their interactions. This includes monitoring for:

- Data Poisoning: Malicious manipulation of training data to compromise the AI model’s integrity or introduce backdoors. This can lead to erroneous outputs or even complete model failure.

- Adversarial Attacks: Subtly crafted inputs designed to mislead an AI model, causing it to make incorrect classifications or predictions. These attacks are often imperceptible to humans but highly effective against AI.

- Model Evasion: Techniques that allow malicious actors to bypass AI-based detection systems by altering their attack vectors.

- Exfiltration of Intellectual Property: Protecting proprietary AI models and their underlying data from unauthorized access and theft.

- Integrity Compromises: Ensuring that the AI model operates as intended and has not been tampered with post-training.
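
To make the idea of behavioral anomaly detection concrete, here is a minimal sketch in Python. It is not Pillar Security's detection method, which the article does not describe. It assumes a hypothetical setup in which the top-class confidence of a model is logged per request, and it flags live values that deviate strongly from a recorded baseline using a simple z-score. The function name, the sample values, and the threshold of 3.0 are all illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, z_threshold=3.0):
    """Return indices of observed values that deviate strongly from the baseline.

    baseline: confidence scores recorded during normal operation (assumed data).
    observed: confidence scores from live traffic to be checked.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Constant baseline: any different value counts as anomalous.
        return [i for i, x in enumerate(observed) if x != mu]
    return [i for i, x in enumerate(observed) if abs(x - mu) / sigma > z_threshold]


# Hypothetical confidence scores, for illustration only.
baseline_confidence = [0.91, 0.93, 0.90, 0.92, 0.94, 0.91, 0.92, 0.93]
live_confidence = [0.92, 0.90, 0.41, 0.93]

anomalous = flag_anomalies(baseline_confidence, live_confidence)
print(anomalous)  # index of the sharp confidence drop
```

A sudden drop in confidence is only one possible signal. A production system would likely combine several such signals (input distribution shift, output class frequencies, request patterns) rather than rely on a single statistic.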

Coverage Across the AI Lifecycle

Pillar Security emphasizes coverage throughout the entire AI software development and deployment lifecycle. Rather than being bolted on after deployment, security is integrated at every phase:

- Development Phase: Ensuring the security of training data, model architectures, and development environments. This includes identifying potential biases or vulnerabilities introduced during model creation.

- Testing and Validation: Rigorously testing AI models against known adversarial techniques and identifying potential weaknesses before deployment.

- Deployment and Operations: Continuous monitoring of deployed AI systems for anomalous behavior, performance degradation indicative of an attack, or unauthorized access attempts. This also involves securing the infrastructure hosting the AI models.
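
One simple control that fits the deployment phase is verifying that a model artifact has not changed since it was approved. The sketch below is a generic illustration, not a description of Pillar Security's implementation. It assumes the SHA-256 digest of the artifact was recorded at release time; the file name `model.bin` and the helper names are hypothetical.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def artifact_is_intact(path, expected_hex):
    """Compare the current digest with the one recorded at release time."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex)


# Demonstration with a throwaway file standing in for real model weights.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical artifact name
    model_path.write_bytes(b"fake model weights")
    recorded_digest = sha256_of_file(model_path)

    ok_before = artifact_is_intact(model_path, recorded_digest)

    # Simulate post-training tampering with the artifact.
    model_path.write_bytes(b"fake model weights, tampered")
    ok_after = artifact_is_intact(model_path, recorded_digest)

print(ok_before, ok_after)
```

A hash check only detects modification of the stored file. It does not cover attacks that manipulate inputs at inference time, which is why runtime monitoring is listed as a separate concern.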

Remediation Actions

Pillar Security’s specific remediation capabilities are not detailed here. In general, a robust AI security platform offers the following types of remediation and mitigation strategies:

- Alerting and Incident Response: Generating high-fidelity alerts for detected AI threats and integrating with existing Security Information and Event Management (SIEM) systems for rapid incident response.

- Model Retraining and Hardening: Providing insights to developers on how to re-train or fine-tune models to be more resilient against identified threats. This might involve techniques like adversarial training.

- Data Sanitization: Recommending or implementing methods to cleanse or filter compromised training data.

- Access Control Enhancements: Strengthening authentication and authorization mechanisms around AI models and sensitive data.

- Runtime Protection: Implementing safeguards to protect deployed AI models from adversarial inputs and unauthorized modifications.

For example, if an adversarial vulnerability based on imperceptible input perturbations were discovered in a widely used ML framework, remediation might involve adding input sanitization layers in front of the model or retraining the model with adversarial examples to improve its robustness.
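
To illustrate what a small adversarial perturbation looks like, the following sketch applies the fast gradient sign method (FGSM) to a toy logistic regression classifier in pure Python. It is an assumption-laden illustration: the weights, the input, and the value of `epsilon` are made up, and nothing here reflects how Pillar Security's platform generates or detects such inputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss.

    For binary cross-entropy, d(loss)/d(x_i) = (p - label) * w_i.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Toy model and input (illustrative values only).
weights = [2.0, -1.5, 0.5]
bias = 0.0
x = [0.2, 0.1, 0.1]
label = 1
epsilon = 0.1

x_adv = fgsm_perturb(weights, bias, x, label, epsilon)

p_clean = predict_proba(weights, bias, x)
p_adv = predict_proba(weights, bias, x_adv)
print(f"clean: {p_clean:.3f}, adversarial: {p_adv:.3f}")
```

In this toy case, a change of at most 0.1 per feature is enough to move the prediction across the 0.5 decision boundary. Adversarial training, mentioned above, would add examples like `x_adv` to the training set with their correct label so that the retrained model is less sensitive to such shifts.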

Conclusion

Pillar Security is addressing a critical need in the evolving cybersecurity landscape. By focusing on the unique challenges of AI security and adopting a holistic, lifecycle-based approach, their platform aims to provide the necessary trust for organizations to confidently deploy and leverage AI technologies. As AI continues its pervasive integration into critical infrastructure and business processes, specialized solutions like Pillar Security will be indispensable in safeguarding these systems from novel and complex threats. Their emphasis on new detection methodologies, combined with comprehensive lifecycle coverage, positions them as a key player in building a more secure AI future.