Zero Trust + AI: Reimagining Privacy in the Age of Agentic AI

Privacy used to be a perimeter problem. We secured our digital assets with firewalls, access controls, and strict policies, believing that strong boundaries guaranteed safety. But what happens when the lines blur? What happens when artificial intelligence systems become autonomous actors that interact with sensitive data and critical systems without constant human oversight? The traditional fortress model of privacy is crumbling. In the era of agentic AI, privacy is less about control and more about verifiable trust. And trust, by its very nature, is tested when no one is looking.

The Evolution of Privacy: From Perimeter to Trust

For decades, cybersecurity frameworks emphasized layered defenses, permissions, and vigilant monitoring. Data resided within defined perimeters, protected by walls and locks. User identities were verified at the gate, and access was granted based on pre-approved policies. This model worked reasonably well when human interaction was the primary vector for data movement and system access.

However, the emergence of agentic AI fundamentally shifts this paradigm. Agentic AI refers to AI systems capable of pursuing goals, making decisions, and interacting with environments and other entities autonomously. These agents operate without continuous human intervention, learning, adapting, and even initiating actions. Think of AI-powered financial advisors executing trades, autonomous cybersecurity agents responding to threats, or intelligent assistants managing your smart home. Their interactions with data and systems are vastly different from a simple human login.

Where does privacy stand when an AI agent, not a human, is accessing, processing, and potentially sharing sensitive information? Control, in the traditional sense, becomes an illusion. This necessitates a pivot from a perimeter-centric view to one founded on continuous verification and explicit trust, a concept epitomized by Zero Trust.

Zero Trust: The Foundation for Agentic AI Privacy

The Zero Trust security model, built on the principle of “never trust, always verify,” becomes indispensable when grappling with agentic AI:

- Never Trust: Every request, whether from a human or an AI agent, is treated as untrusted until proven otherwise. No implicit trust is granted based on network location or prior permissions.

- Always Verify: All users (human or AI), devices, and applications must be authenticated and authorized continuously, with access granted on a least-privilege basis. This involves rigorous identity verification and context-aware access policies.

- Micro-segmentation: Networks are divided into smaller, isolated segments, limiting lateral movement for any compromised entity, including a rogue AI agent.

- Continuous Monitoring: All activity is logged and monitored for anomalies, allowing for real-time detection of unauthorized access or suspicious behavior by AI agents.
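The “never trust, always verify” principles above can be sketched for agent traffic as a check that runs on every single request. This is a minimal illustration that assumes a hypothetical credential format: an HMAC-signed, short-lived token carrying the agent’s identity and its granted scopes. The names `issue_credential`, `verify_request`, and `SIGNING_KEY`, along with the scope strings, are illustrative only. Production systems would typically rely on established mechanisms such as OAuth 2.0 access tokens or mTLS workload identities rather than a home-grown format.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import List, Optional, Tuple

# Hypothetical shared secret between the credential issuer and the enforcement point.
# Real deployments would use managed, rotated keys or asymmetric signatures.
SIGNING_KEY = b"demo-key-rotate-me"


def issue_credential(agent_id: str, scopes: List[str], ttl_s: int = 300,
                     now: Optional[float] = None) -> str:
    """Issue a short-lived credential bound to one agent identity."""
    issued = time.time() if now is None else now
    claims = {"sub": agent_id, "scopes": sorted(scopes), "exp": issued + ttl_s}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    sig = hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()
    return payload + "." + sig


def verify_request(token: str, required_scope: str,
                   now: Optional[float] = None) -> Tuple[bool, str]:
    """Re-check signature, expiry and scope on every request: no cached trust."""
    try:
        payload, sig = token.rsplit(".", 1)
    except ValueError:
        return False, "malformed token"
    expected = hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison so response timing does not leak signature bytes.
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return False, "bad signature"
    claims = json.loads(base64.urlsafe_b64decode(payload))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        return False, "credential expired"
    # Least privilege: only explicitly granted scopes pass; nothing is implied.
    if required_scope not in claims["scopes"]:
        return False, f"scope {required_scope!r} not granted to {claims['sub']}"
    return True, claims["sub"]


token = issue_credential("agent-invoice-bot", ["invoices:read"], now=1000.0)
print(verify_request(token, "invoices:read", now=1100.0))  # (True, 'agent-invoice-bot')
print(verify_request(token, "payroll:read", now=1100.0))   # denied: scope not granted
print(verify_request(token, "invoices:read", now=1400.0))  # (False, 'credential expired')
```

The short time-to-live is the key design choice. Because credentials expire after minutes, a stolen or misused agent token has a small window of usefulness, and revoking an agent amounts to no longer issuing it new tokens.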

For agentic AI, Zero Trust translates to:

- AI Identity and Credential Management: Each AI agent must have a distinct, verifiable identity, much like a human user. This identity is used for authentication against every resource.

- Attribute-Based Access Control (ABAC) for AI: Access decisions for AI agents are dynamically determined based on a multitude of attributes, including the agent’s purpose, current context, data sensitivity, and the specific resource being accessed. This goes beyond simple role-based access.

- Behavioral Analytics: AI agents’ normal operational patterns are baselined. Any deviations, such as an agent attempting to access data outside its defined operational scope, trigger alerts and potential revocation of access. This is particularly crucial for detecting malicious or erroneous AI behavior, not only human threats.
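The attributes listed above (the agent’s purpose, current context, data sensitivity, and the resource itself) can be combined in a small policy function, with repeated out-of-scope attempts feeding a simple behavioral signal. This is a minimal sketch, not a production policy engine. The classification levels, the operating-hours window standing in for a behavioral baseline, and the three-strike revocation threshold are all hypothetical choices. In practice, such rules usually live in a dedicated policy engine; Open Policy Agent is one widely used option.

```python
from dataclasses import dataclass, field

# Hypothetical ordering of data classifications, lowest to highest sensitivity.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass
class AgentProfile:
    agent_id: str
    purpose: str            # declared purpose, e.g. "billing"
    max_sensitivity: str    # highest classification this agent may read
    allowed_hours: range    # UTC hours in which the agent normally operates (toy baseline)
    denials: int = 0        # out-of-scope attempts observed so far
    revoked: bool = False


@dataclass
class Resource:
    name: str
    purposes: set = field(default_factory=set)  # purposes this data may serve
    sensitivity: str = "internal"


def decide(agent: AgentProfile, resource: Resource, hour_utc: int,
           max_denials: int = 3) -> str:
    """Attribute-based decision for one request: 'permit' or 'deny: <reasons>'."""
    if agent.revoked:
        return "deny: agent revoked"
    reasons = []
    if agent.purpose not in resource.purposes:
        reasons.append("purpose mismatch")
    if SENSITIVITY[resource.sensitivity] > SENSITIVITY[agent.max_sensitivity]:
        reasons.append("sensitivity too high")
    if hour_utc not in agent.allowed_hours:
        reasons.append("outside normal operating window")
    if not reasons:
        return "permit"
    # Behavioral signal: repeated out-of-scope attempts lead to revocation.
    agent.denials += 1
    if agent.denials >= max_denials:
        agent.revoked = True
    return "deny: " + ", ".join(reasons)


bot = AgentProfile("agent-billing-01", "billing", "confidential", range(6, 20))
invoices = Resource("invoices", {"billing"}, "confidential")
hr_files = Resource("hr_files", {"hr"}, "restricted")
print(decide(bot, invoices, hour_utc=10))  # permit
print(decide(bot, hr_files, hour_utc=10))  # deny: purpose mismatch, sensitivity too high
```

Unlike a role check, every decision here depends on properties of both the agent and the specific data, so the same agent can be permitted for one record and denied for another.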

Privacy Implications and Challenges

While Zero Trust offers a robust framework, agentic AI introduces unique privacy challenges:

- Data Exfiltration by Design: An AI agent built to process and analyze vast datasets must, by design, move data between systems. Ensuring that this movement complies with privacy regulations (GDPR, CCPA, etc.) and organizational policies is complex.

- “Black Box” Trust: The inner workings of complex AI models can be opaque. If an AI makes a decision that leads to a privacy breach, pinpointing the cause within a complex neural network is challenging. This is the problem that AI explainability (XAI) aims to address.

- Autonomous Data Usage: An agentic AI might, in its pursuit of a goal, utilize data in ways not explicitly foreseen or approved at its inception. This dynamic use of data requires continuous consent management and auditing.

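- The “Right to Be Forgotten” for AI: How do you ensure an AI agent truly “forgets” sensitive data it has processed, especially if that data has been incorporated into its learned model parameters? Removing a record’s influence without retraining from scratch is an active research area known as machine unlearning. Training with differential privacy can also limit how much any single record shapes the model in the first place.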

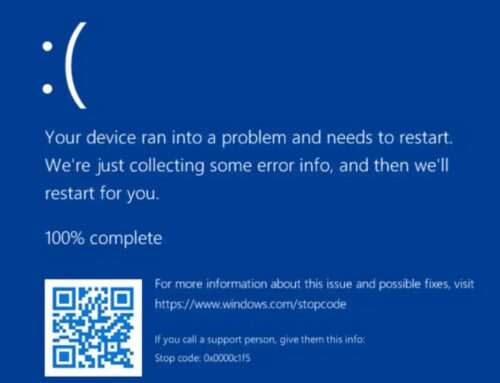

- Vulnerability to Adversarial Attacks (CVE Example): AI models themselves can be targets. Adversarial attacks subtly alter inputs to make an AI misclassify data or make erroneous decisions, potentially leading to privacy breaches. The surrounding software stack widens the attack surface further, because ML frameworks and model-serving tools receive CVEs like any other software, and general infrastructure flaws matter too. CVE-2020-0796 (SMBGhost), for example, is a remote code execution vulnerability in Microsoft’s SMBv3 protocol rather than an AI-specific flaw. Still, an attacker who gains deep system access through it could reach any data that an AI agent on the same host can reach, leading to significant data compromise.
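To make the adversarial-input risk concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a well-known evasion attack, run against a toy logistic-regression model. The weights, bias, and input are invented for illustration. Real attacks target far larger models, and tools such as those in the table later in this article automate this kind of robustness testing.

```python
import math
from typing import List

# Toy logistic-regression classifier; weights and bias are invented for illustration.
WEIGHTS = [2.0, -1.5, 0.5]
BIAS = -0.2


def predict_proba(x: List[float]) -> float:
    """Probability that x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(x: List[float], true_label: int, epsilon: float) -> List[float]:
    """Fast Gradient Sign Method: move every feature by epsilon in the
    direction that increases the loss on the true label."""
    p = predict_proba(x)
    # Gradient of binary cross-entropy w.r.t. the input of a logistic model:
    # dL/dx_i = (p - y) * w_i
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [0.6, 0.4, 0.2]
x_adv = fgsm(x, true_label=1, epsilon=0.2)
print(predict_proba(x) >= 0.5)      # True: classified as class 1
print(predict_proba(x_adv) >= 0.5)  # False: a small perturbation flips the label
```

No feature moves by more than 0.2, yet the decision flips. For an agent that gates data access on a model’s output, this kind of small, targeted change to its inputs is the practical risk.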

Remediation Actions for Safeguarding Privacy with Agentic AI

Securing privacy in an agentic AI ecosystem requires a multi-faceted approach:

- Implement a Comprehensive Zero Trust Architecture: This is non-negotiable. Extend Zero Trust principles to AI agents, their data interactions, and the systems they access.

- Robust AI Identity and Access Management (AI-IAM): Develop or adopt specialized IAM solutions that can authenticate and authorize AI agents with at least the same rigor as human users. Define granular, least-privilege roles for each agent’s purpose.

- Data Minimization and Anonymization: Train AI models and allow agents to access only the absolute minimum amount of data required for their task. Prioritize anonymized or synthetic data where possible.

- Principle of Least Privilege Applied to AI: Ensure AI agents only have permissions to execute specific tasks and access specific datasets that are absolutely necessary for their function, and nothing more.

- Continuous Auditing and Explainable AI (XAI): Implement robust logging of all AI agent activities. Utilize XAI techniques to understand and verify how AI decisions leading to data access or usage are made, enhancing accountability.

- Privacy-Preserving AI Techniques: Explore and deploy techniques like Federated Learning (training models on decentralized datasets without centralizing raw data), Homomorphic Encryption (performing computations on encrypted data), and Differential Privacy (adding calibrated noise to query results or training updates so that outputs reveal little about any single individual).

- Regular Security Audits of AI Systems: Conduct periodic penetration testing and security assessments specifically targeting AI models and their operational environments to uncover vulnerabilities.

- Incident Response Plans for AI-Induced Breaches: Develop specific playbooks for detecting, containing, and remediating privacy incidents initiated by compromised or malfunctioning AI agents.
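Among the privacy-preserving techniques above, differential privacy is the easiest to show in a few lines. The sketch below applies the classic Laplace mechanism to a counting query, such as an agent reporting how many records match a condition. The record format and epsilon value are hypothetical. Production systems should rely on a vetted differential privacy library rather than hand-rolled noise, because naive floating-point implementations can weaken the guarantee.

```python
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records: Iterable[T], predicate: Callable[[T], bool],
             epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes
    it by at most 1), so the noise scale is 1 / epsilon. Smaller epsilon means
    more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical records an agent might be asked to summarize.
patients = [{"age": a} for a in range(100)]
rng = random.Random(42)
# The true answer is 35; the released value is 35 plus Laplace noise of scale 2.
print(dp_count(patients, lambda p: p["age"] >= 65, epsilon=0.5, rng=rng))
```

Each released answer consumes privacy budget. Under sequential composition, answering k such queries at epsilon each yields k·epsilon-differential privacy overall, so an agent allowed to query freely also needs a cap on its total budget.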

Relevant Tools for AI Security and Privacy Auditing

| Tool Name | Purpose | Link |
|---|---|---|
| IBM AI Explainability 360 | Understanding AI model decisions for transparency and auditing. | https://github.com/Trusted-AI/AIX360 |
| Microsoft Counterfit | Generating adversarial attacks against AI systems to test robustness. | https://github.com/microsoft/counterfit |
| IBM Adversarial Robustness Toolbox (ART) | Defending AI models against adversarial attacks and evaluating robustness. | https://github.com/Trusted-AI/adversarial-robustness-toolbox |
| OpenMMLab OpenCompass | Evaluating large language models (LLMs) across a broad range of benchmarks. | https://github.com/open-compass/opencompass |

Conclusion: Building Trust in an Autonomous World

The rise of agentic AI marks a fundamental shift in how we conceive of and protect privacy. It moves beyond fortress walls and static permissions, demanding a dynamic, continuously verified model of trust. Zero Trust provides the architectural blueprint, but implementing it successfully in an AI-driven world requires a deep understanding of AI’s unique characteristics and potential vulnerabilities, along with an unwavering commitment to privacy-by-design. As AI agents become increasingly autonomous actors in our digital ecosystems, our ability to maintain privacy will hinge not on control, but on our capacity to build verifiable trust that stands strong, even when we’re not looking.